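Runtime Timing Analysis of the TCP/IP Protocol Stack in the Linux Kernel

- Building on a solid understanding of the Linux kernel's task-scheduling mechanisms (interrupt handling, softirq, tasklet, workqueue, kernel threads, and so on), identify the execution entities in the TCP/IP stack that take part in send and recv, and analyze the timing of their cooperation.

- Cover building, deployment, running, evaluation, principles, source-code analysis, and trace debugging.

- Include sequence diagrams.

The server and client code used in this analysis is shown below: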

/* server.c: accepts TCP connections and sends a greeting to each client */
#include <stdio.h>      /* perror, printf */
#include <stdlib.h>     /* exit */
#include <string.h>     /* memset */
#include <sys/types.h>
#include <sys/wait.h>   /* waitpid, WNOHANG */
#include <unistd.h>     /* fork, close */
#include <sys/socket.h>
#include <netinet/in.h> /* struct sockaddr_in, INADDR_ANY */
#include <arpa/inet.h>  /* htons, inet_ntoa */

#define MYPORT 3490 /* port to listen on */
#define BACKLOG 10  /* length of the pending-connection queue for listen */

int main(void)
{
    int sockfd, new_fd;            /* listening socket, per-connection socket */
    struct sockaddr_in sa;         /* our own address */
    struct sockaddr_in their_addr; /* the peer's address */
    socklen_t sin_size;

    if ((sockfd = socket(PF_INET, SOCK_STREAM, 0)) == -1)
    {
        perror("socket");
        exit(1);
    }

    memset(&sa, 0, sizeof(sa));             /* zero everything, including sin_zero */
    sa.sin_family = AF_INET;
    sa.sin_port = htons(MYPORT);            /* network byte order */
    sa.sin_addr.s_addr = htonl(INADDR_ANY); /* bind to any local address */

    if (bind(sockfd, (struct sockaddr *)&sa, sizeof(sa)) == -1)
    {
        perror("bind");
        exit(1);
    }

    if (listen(sockfd, BACKLOG) == -1)
    {
        perror("listen");
        exit(1);
    }

    /* main loop */
    while (1)
    {
        sin_size = sizeof(struct sockaddr_in);
        new_fd = accept(sockfd, (struct sockaddr *)&their_addr, &sin_size);
        if (new_fd == -1)
        {
            perror("accept");
            continue;
        }

        printf("Got connection from %s\n", inet_ntoa(their_addr.sin_addr));

        if (fork() == 0)
        {
            /* child process */
            if (send(new_fd, "Hello, world!\n", 14, 0) == -1)
                perror("send");
            close(new_fd);
            exit(0);
        }

        close(new_fd);

        /* reap any child processes that have exited */
        while (waitpid(-1, NULL, WNOHANG) > 0)
            ;
    }

    close(sockfd); /* not reached: the loop above never exits */
    return 0;
}

/* client.c: connects to the server and prints the greeting it receives */
#include <stdio.h>      /* perror, printf, fprintf */
#include <stdlib.h>     /* exit */
#include <string.h>     /* memset */
#include <sys/types.h>
#include <unistd.h>     /* close */
#include <sys/socket.h>
#include <netinet/in.h> /* struct sockaddr_in */
#include <arpa/inet.h>  /* htons */
#include <netdb.h>      /* gethostbyname */

#define PORT 3490       /* the server's port */
#define MAXDATASIZE 100 /* maximum number of bytes read at once */

int main(int argc, char *argv[])
{
    int sockfd, numbytes;
    char buf[MAXDATASIZE];
    struct hostent *he;             /* host information */
    struct sockaddr_in server_addr; /* the peer's address */

    if (argc != 2)
    {
        fprintf(stderr, "usage: client hostname\n");
        exit(1);
    }

    /* get the host info */
    if ((he = gethostbyname(argv[1])) == NULL)
    {
        /* Note: gethostbyname() reports errors through h_errno, so herror()
         * rather than perror() prints the accurate reason. herror() is
         * obsolete and triggers warnings on newer systems, so perror() is
         * kept here. */
        perror("gethostbyname");
        exit(1);
    }

    if ((sockfd = socket(PF_INET, SOCK_STREAM, 0)) == -1)
    {
        perror("socket");
        exit(1);
    }

    memset(&server_addr, 0, sizeof(server_addr)); /* zero everything, including sin_zero */
    server_addr.sin_family = AF_INET;
    server_addr.sin_port = htons(PORT);           /* short, network byte order */
    server_addr.sin_addr = *((struct in_addr *)he->h_addr_list[0]);

    if (connect(sockfd, (struct sockaddr *)&server_addr,
                sizeof(server_addr)) == -1)
    {
        perror("connect");
        exit(1);
    }

    /* read at most MAXDATASIZE - 1 bytes to leave room for the terminating '\0' */
    if ((numbytes = recv(sockfd, buf, MAXDATASIZE - 1, 0)) == -1)
    {
        perror("recv");
        exit(1);
    }

    buf[numbytes] = '\0';
    printf("Received: %s", buf);

    close(sockfd);
    return 0;
}

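1. Network layering models

The OSI model and the TCP/IP model

The Open Systems Interconnection Reference Model (OSI model for short) is a conceptual model proposed by the International Organization for Standardization (ISO). It is a standard framework intended to let all kinds of computers worldwide interconnect into networks.

TCP/IP (Transmission Control Protocol/Internet Protocol) is a protocol suite that carries information across multiple different networks. Unlike the OSI model, the TCP/IP model is not merely a theoretical network standard but the de facto model in use. It borrowed from the OSI model to some extent and grew out of the networks that existed at the time.

The similarities and differences between the two models are shown in the figure below:

The layers of the TCP/IP protocol suite are shown in the figure below:

2. The network subsystem in Linux

The Linux network subsystem provides access to a variety of networking standards and support for a variety of hardware. The figure below shows its overall structure. It can be divided into the protocol layer and the network device drivers: the protocol layer implements each supported network transport protocol, and the drivers communicate with the hardware.

3. Basic use and creation of sockets

Application-layer interface

The application layer sits at the top of the TCP/IP stack and runs in user space. It manipulates the underlying data structures through the API that the kernel provides, which offers a range of functions, for example: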

- read()/write()

- send()/recv()

- sendto()/recvfrom()

- bind()

- connect()

- accept()

- etc...

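Application-layer sockets follow the Unix idea that "everything is a file". On architectures that multiplex socket operations through a single entry point (32-bit x86, for example), the socket-specific functions among these all end up in the sys_socketcall() system call, which performs the various socket operations (such as allocating a socket fd and sending or receiving data).

sys_socketcall() then dispatches on the arguments passed in and calls the function that actually performs the requested operation.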
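Because a socket is exposed to user space as a file descriptor, the generic read()/write() calls work on it just as send()/recv() do. The sketch below illustrates this with a connected socket pair. The helper name echo_through_socketpair is hypothetical, and AF_UNIX is used instead of TCP only so that the example does not depend on any network configuration.

```c
#include <assert.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/*
 * Hypothetical helper (not from the article): writes msg into one end of a
 * connected socket pair with write() and reads it back from the other end
 * with read(). Returns the number of bytes read, or -1 on error.
 */
ssize_t echo_through_socketpair(const char *msg, char *out, size_t outlen)
{
    int sv[2];
    ssize_t n = -1;
    size_t len;

    assert(msg != NULL && out != NULL);
    len = strlen(msg);

    /* AF_UNIX keeps the sketch independent of any network configuration. */
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sv) == -1)
        return -1;

    /* The generic file API works because each socket is a file descriptor. */
    if (write(sv[0], msg, len) == (ssize_t)len)
        n = read(sv[1], out, outlen);

    close(sv[0]);
    close(sv[1]);
    return n;
}
```

Calling echo_through_socketpair("hello", buf, sizeof(buf)) should copy the five bytes into buf; replacing write()/read() with send()/recv() and a flags argument of 0 gives the same behavior.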

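Socket creation

The socket layer has two main roles:

1. Provide upper-layer applications with a protocol-independent, generic network programming interface.

2. Provide lower-layer protocols with a family interface and mechanism so that a concrete protocol family can register itself with the system.

Main data structures:

- struct net_proto_family

- struct socket

- struct sock

- struct inet_protosw

- struct proto_ops

- struct proto

Before an application can send or receive data through the socket API, the socket has to be created. This is done by sock_create(), which calls the create function of the corresponding protocol family:

- inet_create()

- inet6_create()

- unix_create()

The detailed flow is shown in the figure below:

Once the socket has been created, all that the user and the application see in user space is the fd that corresponds to the socket. In kernel space, the kernel keeps a set of complex data structures and links them to one another:

The user and the application then read and write data through the socket fd to carry out network communication.

The main flow of the socket layer is as follows:

After the socket has been created, the usual operations can be performed on its file descriptor.

4. Analysis of the receive and send paths

The analysis below assumes that both ends have finished socket initialization and the three-way handshake, and that data is sent and received in the ESTABLISHED state.

Application layer

Once the socket is set up, data can be sent with send() or sendmsg(). As described above, the call goes through sys_socketcall(), which invokes the real system-call implementation. Part of its source code is as follows: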

SYSCALL_DEFINE2(socketcall, int, call, unsigned long __user *, args)

{

//...preprocessing...

switch (call) {

//...other calls...

case SYS_SEND:

err = __sys_sendto(a0, (void __user *)a1, a[2], a[3],

NULL, 0);

break;

case SYS_SENDTO:

err = __sys_sendto(a0, (void __user *)a1, a[2], a[3],

(struct sockaddr __user *)a[4], a[5]);

break;

case SYS_RECV:

err = __sys_recvfrom(a0, (void __user *)a1, a[2], a[3],

NULL, NULL);

break;

case SYS_RECVFROM:

err = __sys_recvfrom(a0, (void __user *)a1, a[2], a[3],

(struct sockaddr __user *)a[4],

(int __user *)a[5]);

break;

case SYS_SENDMSG:

err = __sys_sendmsg(a0, (struct user_msghdr __user *)a1,

a[2], true);

break;

case SYS_RECVMSG:

err = __sys_recvmsg(a0, (struct user_msghdr __user *)a1,

a[2], true);

break;

}

return err;

}

(1) Receiving data

Taking __sys_recvfrom() as an example, its call flow is analyzed below. Part of the source code:

int __sys_recvfrom(int fd, void __user *ubuf, size_t size, unsigned int flags,

struct sockaddr __user *addr, int __user *addr_len)

{

struct msghdr msg;

//...preprocessing...

//before receiving, validate the user-space buffer and set up msg.msg_iter

err = import_single_range(READ, ubuf, size, &iov, &msg.msg_iter);

//......

//look up the socket structure from the file descriptor fd

sock = sockfd_lookup_light(fd, &err, &fput_needed);

//......

//receive the data

err = sock_recvmsg(sock, &msg, flags);

if (err >= 0 && addr != NULL) {

//copy the peer address from kernel space to user space

err2 = move_addr_to_user(&address,

msg.msg_namelen, addr, addr_len);

if (err2 < 0)

err = err2;

}

//update the file's reference count

fput_light(sock->file, fput_needed);

out:

return err;

}

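The main flow consists of calls to the following functions:

A key data structure here is struct msghdr. When user space sends or receives data with sendmsg/recvmsg, it builds the message in a msghdr, which the kernel sees as struct user_msghdr. Its msg_iov vector points to one or more data buffers, and msg_iovlen gives the number of buffers. After the system call enters the kernel, the information in this structure is copied into the kernel's msghdr.

In the socket send and receive system-call paths, send/recv, sendto/recvfrom, and sendmsg/recvmsg all end up using the kernel's msghdr to organize the data, as shown below. msg_iter summarizes the vector of data buffers. It may describe one buffer or several; for send/sendto it describes exactly one.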
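To make the user-space side of msghdr concrete, the following sketch gathers two separate buffers into a single sendmsg() call through msg_iov/msg_iovlen and receives them as one message with recvmsg(). The helper name gather_send is hypothetical, and an AF_UNIX datagram socket pair stands in for a network socket so that the example is self-contained.

```c
#include <assert.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/uio.h>
#include <unistd.h>

/*
 * Hypothetical helper (not from the article): sends buffers a and b as one
 * message using a two-element iovec, then receives the message into out.
 * Returns the number of bytes received, or -1 on error.
 */
ssize_t gather_send(const char *a, const char *b, char *out, size_t outlen)
{
    int sv[2];
    struct iovec iov[2];
    struct iovec riov;
    struct msghdr msg, rmsg;
    ssize_t n = -1;

    assert(a != NULL && b != NULL && out != NULL);

    /* SOCK_DGRAM preserves message boundaries, so one send is one receive. */
    if (socketpair(AF_UNIX, SOCK_DGRAM, 0, sv) == -1)
        return -1;

    iov[0].iov_base = (void *)a;
    iov[0].iov_len = strlen(a);
    iov[1].iov_base = (void *)b;
    iov[1].iov_len = strlen(b);

    memset(&msg, 0, sizeof(msg));
    msg.msg_iov = iov;      /* vector of data buffers */
    msg.msg_iovlen = 2;     /* number of buffers in the vector */

    if (sendmsg(sv[0], &msg, 0) != -1) {
        riov.iov_base = out;
        riov.iov_len = outlen;
        memset(&rmsg, 0, sizeof(rmsg));
        rmsg.msg_iov = &riov;
        rmsg.msg_iovlen = 1;
        n = recvmsg(sv[1], &rmsg, 0);
    }

    close(sv[0]);
    close(sv[1]);
    return n;
}
```

A plain send() corresponds to the special case of one iovec entry, which matches the single data buffer described for send/sendto above.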

struct msghdr {
    void *msg_name;                 /* ptr to socket address structure */
    int msg_namelen;                /* size of socket address structure */
    struct iov_iter msg_iter;       /* data */
    void *msg_control;              /* ancillary data */
    __kernel_size_t msg_controllen; /* ancillary data buffer length */
    unsigned int msg_flags;         /* flags on received message */
    struct kiocb *msg_iocb;         /* ptr to iocb for async requests */
};

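The result of verifying this in the debugger is shown in the figure below:

(2) Sending data

Taking __sys_sendto() as an example, its call flow is analyzed below. Part of the source code: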

int __sys_sendto(int fd, void __user *buff, size_t len, unsigned int flags,

struct sockaddr __user *addr, int addr_len)

{

struct msghdr msg;

//...preprocessing...

//before sending, validate the user-space buffer and set up msg.msg_iter

err = import_single_range(WRITE, buff, len, &iov, &msg.msg_iter);

//......

//look up the socket structure from the file descriptor fd

sock = sockfd_lookup_light(fd, &err, &fput_needed);

//......

if (addr) {

//copy the destination address from user space to kernel space

err = move_addr_to_kernel(addr, addr_len, &address);

//......

}

//......

//send the data

err = sock_sendmsg(sock, &msg);

out_put:

//update the file's reference count

fput_light(sock->file, fput_needed);

out:

return err;

}

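The main flow consists of calls to the following functions:

The result of verifying this in the debugger is shown in the figure below:

Transport layer

The transport layer sits below the application layer and serves it: after processing the data, it passes the data on down to the network layer.

This layer has two protocols, the connection-oriented TCP and the connectionless UDP. Their send and receive flows differ, so the function call chains of the two protocols are discussed separately.

(1) TCP receive path

tcp_v4_rcv(), defined in net/ipv4/tcp_ipv4.c, receives TCP packets from the network layer and is the entry point for all TCP receive processing. The TCP state transitions for sending and receiving data are shown in the figure below:

Because of its complex state machine, TCP is much more complicated to implement than UDP. Part of the source code: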

int tcp_v4_rcv(struct sk_buff *skb)

{

//...other processing...

lookup:

sk = __inet_lookup_skb(&tcp_hashinfo, skb, __tcp_hdrlen(th), th->source,

th->dest, sdif, &refcounted);

if (!sk)

goto no_tcp_socket;

//...other processing...

if (sk->sk_state == TCP_LISTEN) {

ret = tcp_v4_do_rcv(sk, skb);

goto put_and_return;

}

//...other processing...

if (!sock_owned_by_user(sk)) {

skb_to_free = sk->sk_rx_skb_cache;

sk->sk_rx_skb_cache = NULL;

ret = tcp_v4_do_rcv(sk, skb);

} else {

if (tcp_add_backlog(sk, skb))

goto discard_and_relse;

skb_to_free = NULL;

}

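//...other processing...

TCP first looks up the socket: if a socket in the connected state matches, it is returned; otherwise TCP checks for a socket in the listening state and continues from there.

If the steps above succeed, processing moves on to the socket state check. Different preprocessing is done depending on the socket's state, and finally tcp_v4_do_rcv() is entered for the next stage. The function calls at this point are as follows:

In the TCP state machine, when the socket is in the ESTABLISHED state, there are two paths depending on whether the received segment can be handled quickly. Part of the source code: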

void tcp_rcv_established(struct sock *sk, struct sk_buff *skb)

{

//...other processing...

if ((tcp_flag_word(th) & TCP_HP_BITS) == tp->pred_flags &&

TCP_SKB_CB(skb)->seq == tp->rcv_nxt &&

!after(TCP_SKB_CB(skb)->ack_seq, tp->snd_nxt)) {

int tcp_header_len = tp->tcp_header_len;

if (tcp_header_len == sizeof(struct tcphdr) + TCPOLEN_TSTAMP_ALIGNED) {

/* No? Slow path! */

if (!tcp_parse_aligned_timestamp(tp, th))

goto slow_path;

/* If PAWS failed, check it more carefully in slow path */

if ((s32)(tp->rx_opt.rcv_tsval - tp->rx_opt.ts_recent) < 0)

goto slow_path;

}

//...other processing...

slow_path:

if (len < (th->doff << 2) || tcp_checksum_complete(skb))

goto csum_error;

if (!th->ack && !th->rst && !th->syn)

goto discard;

if (!tcp_validate_incoming(sk, skb, th, 1))

return;

}

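The code shows only the checks and jumps for the slow path. When the fast-path conditions are met, tcp_rcv_established() handles the packet on the fast path. The function calls in this part are as follows:

After a segment has been processed, the data has to be handed to the application layer. This is done by tcp_data_queue(), which inserts the received and processed skb into the receive queue, where it waits for the application layer. The logic is as follows:

- tcp_data_queue mainly copies in-order packets to ucopy or sk_receive_queue, and calls tcp_fast_path_check to try to enable the fast path

- For retransmitted packets, it sets DSACK and sends an ACK immediately

- For out-of-order packets, it also sets DSACK if part of the packet is old data, and adds the packet to the ofo (out-of-order) queue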

Part of the source code:

void tcp_rcv_established(struct sock *sk, struct sk_buff *skb)

{

//...other processing...

tcp_data_queue(sk, skb);

//...other processing...

}

static void tcp_data_queue(struct sock *sk, struct sk_buff *skb)

{

//...insert into the receive queue...

tcp_data_queue_ofo(sk, skb);//handle out-of-order packets

}

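The result of verifying this in the debugger is shown in the figure below:

(2) TCP send path

After data is passed from the application layer to the transport layer, TCP mainly does the following: (1) builds the TCP segment, (2) computes the checksum, (3) sends acknowledgment (ACK) packets, and (4) performs reliability operations such as the sliding window.

The entry function is tcp_sendmsg(), whose main job is to copy user data into skbs. It then calls tcp_push_one() to send; tcp_push_one() calls tcp_write_xmit(), which in turn calls tcp_transmit_skb(), the function every skb passes through on its way out. Finally, ip_queue_xmit() hands the packet to the network layer. Because TCP controls retransmission, the tcp_write_timer function provides the timer.

Part of the source code: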

int tcp_sendmsg(struct sock *sk, struct msghdr *msg, size_t size)

{

int ret;

lock_sock(sk);

ret = tcp_sendmsg_locked(sk, msg, size);

release_sock(sk);

return ret;

}

int tcp_sendmsg_locked(struct sock *sk, struct msghdr *msg, size_t size)

{

//...check the different cases and handle each...

if (((1 << sk->sk_state) & ~(TCPF_ESTABLISHED | TCPF_CLOSE_WAIT)) &&

!tcp_passive_fastopen(sk)) {

err = sk_stream_wait_connect(sk, &timeo);

if (err != 0)

goto do_error;

}

//...check the different cases and handle each...

if (forced_push(tp)) {

tcp_mark_push(tp, skb);

__tcp_push_pending_frames(sk, mss_now, TCP_NAGLE_PUSH);

} else if (skb == tcp_send_head(sk))

tcp_push_one(sk, mss_now);

//...other processing...

}

void tcp_push_one(struct sock *sk, unsigned int mss_now)

{

struct sk_buff *skb = tcp_send_head(sk);

BUG_ON(!skb || skb->len < mss_now);

tcp_write_xmit(sk, mss_now, TCP_NAGLE_PUSH, 1, sk->sk_allocation);

}

static bool tcp_write_xmit(struct sock *sk, unsigned int mss_now, int nonagle,

int push_one, gfp_t gfp)

{

//...other processing...

while ((skb = tcp_send_head(sk))) {

//...other processing...

if (unlikely(tcp_transmit_skb(sk, skb, 1, gfp)))

break;

//...other processing...

}

return !tp->packets_out && !tcp_write_queue_empty(sk);

}

static int tcp_transmit_skb(struct sock *sk, struct sk_buff *skb, int clone_it,

gfp_t gfp_mask)

{

return __tcp_transmit_skb(sk, skb, clone_it, gfp_mask,

tcp_sk(sk)->rcv_nxt);

}

static int __tcp_transmit_skb(struct sock *sk, struct sk_buff *skb,

int clone_it, gfp_t gfp_mask, u32 rcv_nxt)

{

//...build the TCP packet structure...

err = icsk->icsk_af_ops->queue_xmit(sk, skb, &inet->cork.fl);//send the data

if (unlikely(err > 0)) {

tcp_enter_cwr(sk);

err = net_xmit_eval(err);

}

if (!err && oskb) {

tcp_update_skb_after_send(sk, oskb, prior_wstamp);

tcp_rate_skb_sent(sk, oskb);

}

return err;

}

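The function calls in this part are as follows:

The result of verifying this in the debugger is shown in the figure below:

(3) UDP receive path

Compared with TCP's complex state machine and reliability requirements, UDP is connectionless, which makes its receive path much simpler.

udp_rcv(), defined in net/ipv4/udp.c, handles UDP packets. It is a wrapper around __udp4_lib_rcv() and takes the socket buffer as its argument. __udp4_lib_rcv() calls __udp4_lib_lookup_skb() to look up the socket in udptable and, if one is found, calls udp_queue_rcv_skb() (through udp_unicast_rcv_skb() in the code below).

Part of the source code: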

int udp_rcv(struct sk_buff *skb)

{

return __udp4_lib_rcv(skb, &udp_table, IPPROTO_UDP);

}

int __udp4_lib_rcv(struct sk_buff *skb, struct udp_table *udptable,

int proto)

{

//...preprocessing...

sk = __udp4_lib_lookup_skb(skb, uh->source, uh->dest, udptable);

if (sk)

return udp_unicast_rcv_skb(sk, skb, uh);

//...other processing...

}

static int udp_unicast_rcv_skb(struct sock *sk, struct sk_buff *skb,

struct udphdr *uh)

{

int ret;

if (inet_get_convert_csum(sk) && uh->check && !IS_UDPLITE(sk))

skb_checksum_try_convert(skb, IPPROTO_UDP, inet_compute_pseudo);

ret = udp_queue_rcv_skb(sk, skb);

/* a return value > 0 means to resubmit the input, but

* it wants the return to be -protocol, or 0 */

if (ret > 0)

return -ret;

return 0;

}

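The function calls in this part are as follows:

(4) UDP send path

Compared with TCP's complex state machine and reliability requirements, UDP is connectionless, which makes its send path much simpler.

For a UDP socket, the function that does the real work for a sendto call is udp_sendmsg(), defined in net/ipv4/udp.c. If the data does not need corking, it builds an skb and calls udp_send_skb() directly. If the socket is in the pending state, it calls ip_append_data() to organize the data into skbs and add them to the send queue, and finally udp_push_pending_frames() hands the finished datagram to the network layer.

Part of the source code: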

int udp_sendmsg(struct sock *sk, struct msghdr *msg, size_t len)

{

//...preprocessing...

if (!corkreq) {

struct inet_cork cork;

skb = ip_make_skb(sk, fl4, getfrag, msg, ulen,

sizeof(struct udphdr), &ipc, &rt,

&cork, msg->msg_flags);

err = PTR_ERR(skb);

if (!IS_ERR_OR_NULL(skb))

err = udp_send_skb(skb, fl4, &cork);

goto out;

}

//...other processing...

do_append_data:

up->len += ulen;

err = ip_append_data(sk, fl4, getfrag, msg, ulen,

sizeof(struct udphdr), &ipc, &rt,

corkreq ? msg->msg_flags|MSG_MORE : msg->msg_flags);

if (err)

udp_flush_pending_frames(sk);

else if (!corkreq)

err = udp_push_pending_frames(sk);

else if (unlikely(skb_queue_empty(&sk->sk_write_queue)))

up->pending = 0;

release_sock(sk);

//...other processing...

}

int udp_push_pending_frames(struct sock *sk)

{

//...preprocessing...

err = udp_send_skb(skb, fl4, &inet->cork.base);

//...other processing...

return err;

}

static int udp_send_skb(struct sk_buff *skb, struct flowi4 *fl4,

struct inet_cork *cork)

{

//...other processing...

//hand over to the network layer

err = ip_send_skb(sock_net(sk), skb);

//...other processing...

}

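The function calls in this part are as follows:

Network layer

The network layer is the IP layer. Besides sending and receiving data, it also forwards and routes packets. Finding the best route and choosing a suitable network interface also involves handling lower-level address families, such as MAC addresses.

(1) Receiving data

The main receive function for IPv4 packets is ip_rcv(). It checks the packet type and drops the packet immediately if it is PACKET_OTHERHOST (defined in include/uapi/linux/if_packet.h). It checks whether the skb is shared and clones it if so. It obtains the IP header in order to carry out the protocol processing (in the version shown below, these checks live in ip_rcv_core()). It then calls NF_HOOK, which is defined in include/linux/netfilter.h.

Part of the source code: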

int ip_rcv(struct sk_buff *skb, struct net_device *dev, struct packet_type *pt,

struct net_device *orig_dev)

{

struct net *net = dev_net(dev);

skb = ip_rcv_core(skb, net);

if (skb == NULL)

return NET_RX_DROP;

return NF_HOOK(NFPROTO_IPV4, NF_INET_PRE_ROUTING,

net, NULL, skb, dev, NULL,

ip_rcv_finish);

}

static inline int

NF_HOOK(uint8_t pf, unsigned int hook, struct net *net, struct sock *sk, struct sk_buff *skb,

struct net_device *in, struct net_device *out,

int (*okfn)(struct net *, struct sock *, struct sk_buff *))

{

int ret = nf_hook(pf, hook, net, sk, skb, in, out, okfn);

if (ret == 1)

ret = okfn(net, sk, skb);

return ret;

}

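NF_HOOK is the netfilter hook wrapper. nf_hook() returns 1 if the packet is allowed to continue, in which case okfn is called. Any other return value means that the hook has consumed the packet.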
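The "run the hooks, then continue only if they return 1" pattern can be modelled in user space as follows. This is a sketch under stated assumptions, not the netfilter API: struct packet, hook_fn, ok_fn, hook_then_continue, drop_oversized, and deliver are all hypothetical names, and the real NF_HOOK takes considerably more arguments.

```c
#include <assert.h>
#include <stddef.h>

struct packet { int len; int delivered; };

typedef int (*hook_fn)(struct packet *);   /* returns 1 to let the packet pass */
typedef int (*ok_fn)(struct packet *);     /* continuation, in the role of okfn */

/* Runs every hook in order; stops at the first one that does not return 1. */
static int run_hooks(const hook_fn *hooks, size_t n, struct packet *p)
{
    for (size_t i = 0; i < n; i++) {
        int verdict = hooks[i](p);
        if (verdict != 1)
            return verdict;    /* the hook consumed or rejected the packet */
    }
    return 1;
}

/* Mirrors the shape of NF_HOOK: call okfn only when the hooks return 1. */
int hook_then_continue(const hook_fn *hooks, size_t n, struct packet *p,
                       ok_fn okfn)
{
    int ret;

    assert(p != NULL && okfn != NULL);
    ret = run_hooks(hooks, n, p);
    if (ret == 1)
        ret = okfn(p);
    return ret;
}

/* Example hook: rejects packets longer than 1500 bytes. */
int drop_oversized(struct packet *p)
{
    return p->len <= 1500 ? 1 : -1;
}

/* Example continuation: marks the packet as delivered. */
int deliver(struct packet *p)
{
    p->delivered = 1;
    return 0;
}
```

Passing the continuation as a function pointer is what lets the same wrapper serve every hook point: only the hook number and the okfn change from one call site to the next.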

Here hook is NF_INET_PRE_ROUTING (defined in include/uapi/linux/netfilter.h).

okfn points to ip_rcv_finish(), which is also defined in net/ipv4/ip_input.c:

static int ip_rcv_finish(struct net *net, struct sock *sk, struct sk_buff *skb)

{

struct net_device *dev = skb->dev;

int ret;

/* if ingress device is enslaved to an L3 master device pass the

* skb to its handler for processing

*/

skb = l3mdev_ip_rcv(skb);

if (!skb)

return NET_RX_SUCCESS;

ret = ip_rcv_finish_core(net, sk, skb, dev);

if (ret != NET_RX_DROP)

ret = dst_input(skb);

return ret;

}

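The IP layer has to implement fragmentation and reassembly. It also has to consult the routing subsystem to decide whether a packet is for the local host or must be forwarded. If it is for the local host, ip_local_deliver() and ip_local_deliver_finish() are called in turn; if it must be forwarded, ip_forward() is called. Part of the source code: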

static int ip_local_deliver_finish(struct net *net, struct sock *sk, struct sk_buff *skb)

{

__skb_pull(skb, skb_network_header_len(skb));

rcu_read_lock();

ip_protocol_deliver_rcu(net, skb, ip_hdr(skb)->protocol);

rcu_read_unlock();

return 0;

}

int ip_local_deliver(struct sk_buff *skb)

{

/* Reassemble IP fragments. */

struct net *net = dev_net(skb->dev);

if (ip_is_fragment(ip_hdr(skb))) {

if (ip_defrag(net, skb, IP_DEFRAG_LOCAL_DELIVER))

return 0;

}

return NF_HOOK(NFPROTO_IPV4, NF_INET_LOCAL_IN,

net, NULL, skb, skb->dev, NULL,

ip_local_deliver_finish);

}

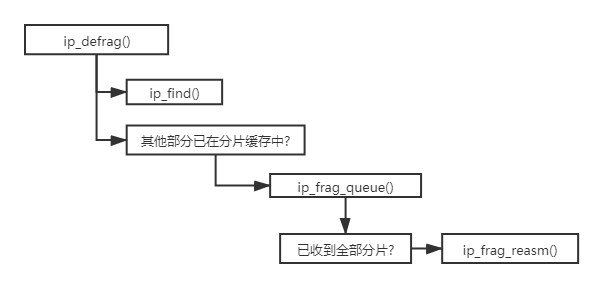

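1) Fragment reassembly

An IP packet may be fragmented; ip_defrag() reassembles the parts of a fragmented packet. The kernel manages the fragments that belong to one original packet in a separate cache, called the fragment cache. The fragments of one packet are kept in their own queue until all fragments of that packet have arrived. Finally, ip_frag_reasm() puts the fragments back together.

Part of the source code: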

int ip_defrag(struct net *net, struct sk_buff *skb, u32 user)

{

//...preprocessing...

qp = ip_find(net, ip_hdr(skb), user, vif);

if (qp) {

int ret;

spin_lock(&qp->q.lock);

ret = ip_frag_queue(qp, skb);

spin_unlock(&qp->q.lock);

ipq_put(qp);

return ret;

}

//...postprocessing...

}

static int ip_frag_queue(struct ipq *qp, struct sk_buff *skb)

{

//...preprocessing...

if (qp->q.flags == (INET_FRAG_FIRST_IN | INET_FRAG_LAST_IN) &&

qp->q.meat == qp->q.len) {

unsigned long orefdst = skb->_skb_refdst;

skb->_skb_refdst = 0UL;

err = ip_frag_reasm(qp, skb, prev_tail, dev);//reassemble the fragments

skb->_skb_refdst = orefdst;

if (err)

inet_frag_kill(&qp->q);

return err;

}

//...other processing...

}

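The function calls in this part are as follows:

2) Delivery to the transport layer

If the data is for the local host, control returns to ip_local_deliver(). After the netfilter hook NF_INET_LOCAL_IN is invoked, processing resumes in ip_local_deliver_finish(). The packet's protocol identifier selects a transport-layer function, and the packet is passed to that function. Every protocol has an instance of struct net_protocol, which is defined in include/net/protocol.h.

3) Packet forwarding

An IP packet may also be forwarded to another computer, which requires calling ip_forward(). ip_forward() uses NF_HOOK with hook number NF_INET_FORWARD and callback ip_forward_finish().

Part of the source code: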

int ip_forward(struct sk_buff *skb)

{

//...other processing...

return NF_HOOK(NFPROTO_IPV4, NF_INET_FORWARD,

net, NULL, skb, skb->dev, rt->dst.dev,

ip_forward_finish);

}

static int ip_forward_finish(struct net *net, struct sock *sk, struct sk_buff *skb)

{

struct ip_options *opt = &(IPCB(skb)->opt);

__IP_INC_STATS(net, IPSTATS_MIB_OUTFORWDATAGRAMS);

__IP_ADD_STATS(net, IPSTATS_MIB_OUTOCTETS, skb->len);

#ifdef CONFIG_NET_SWITCHDEV

if (skb->offload_l3_fwd_mark) {

consume_skb(skb);

return 0;

}

#endif

if (unlikely(opt->optlen))

ip_forward_options(skb);

skb->tstamp = 0;

return dst_output(net, sk, skb);

}

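The overall function calls for receiving data in the network layer are as follows:

The debugging result is as follows:

(2) Sending data

Compared with receiving, where each packet must be checked to see whether it is for the local host, sending involves far fewer decisions.

When the upper-layer protocol is TCP, the entry function is ip_queue_xmit(), defined in net/ipv4/ip_output.c.

When the upper-layer protocol is UDP, the entry function is ip_append_data(), as discussed above.

Both protocols eventually call ip_local_out() to send the data.

The network layer has to choose suitable routes and switching nodes between networks and ensure timely delivery. Its main tasks are: (1) routing, that is, choosing the next hop; (2) adding the IP header; (3) computing the IP header checksum, which is used to detect whether the IP header was corrupted in transit; (4) fragmenting the packet if necessary; and (5) once processing is complete, obtaining the next hop's MAC address, setting the link-layer header, and handing over to the link layer.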
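Task (3), the IP header checksum, is the 16-bit ones' complement of the ones' complement sum of the header words, computed with the checksum field set to zero. The portable sketch below illustrates the arithmetic only; the function name ip_header_checksum is hypothetical, and the kernel itself uses an architecture-optimized routine behind ip_send_check().

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/*
 * Computes the Internet checksum over len bytes of an IP header.
 * The checksum field inside the header must be zero when generating a
 * checksum; running the function over a header with a valid checksum
 * filled in yields 0.
 */
uint16_t ip_header_checksum(const uint8_t *hdr, size_t len)
{
    uint32_t sum = 0;

    assert(hdr != NULL);

    /* Add the header as a sequence of big-endian 16-bit words. */
    for (size_t i = 0; i + 1 < len; i += 2)
        sum += ((uint32_t)hdr[i] << 8) | hdr[i + 1];

    /* An odd trailing byte is padded with zero on the right. */
    if (len & 1)
        sum += (uint32_t)hdr[len - 1] << 8;

    /* Fold the carries back into the low 16 bits. */
    while (sum >> 16)
        sum = (sum & 0xffff) + (sum >> 16);

    return (uint16_t)~sum;
}
```

The receiver runs the same sum over the header as received; a result of zero means that no corruption was detected.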

Part of the source code:

int __ip_queue_xmit(struct sock *sk, struct sk_buff *skb, struct flowi *fl,

__u8 tos)

{

//route lookup succeeded

packet_routed:

//...preprocessing...

res = ip_local_out(net, sk, skb);

rcu_read_unlock();

return res;

no_route:

rcu_read_unlock();

IP_INC_STATS(net, IPSTATS_MIB_OUTNOROUTES);

kfree_skb(skb);

return -EHOSTUNREACH;

}

int ip_local_out(struct net *net, struct sock *sk, struct sk_buff *skb)

{

int err;

err = __ip_local_out(net, sk, skb);

if (likely(err == 1))

err = dst_output(net, sk, skb);

return err;

}

int __ip_local_out(struct net *net, struct sock *sk, struct sk_buff *skb)

{

struct iphdr *iph = ip_hdr(skb);

iph->tot_len = htons(skb->len);

ip_send_check(iph);

/* if egress device is enslaved to an L3 master device pass the

* skb to its handler for processing */

skb = l3mdev_ip_out(sk, skb);

if (unlikely(!skb))

return 0;

skb->protocol = htons(ETH_P_IP);

return nf_hook(NFPROTO_IPV4, NF_INET_LOCAL_OUT,

net, sk, skb, NULL, skb_dst(skb)->dev,

dst_output);

}

static inline int dst_output(struct net *net, struct sock *sk, struct sk_buff *skb)

{

return skb_dst(skb)->output(net, sk, skb);

}

int ip_output(struct net *net, struct sock *sk, struct sk_buff *skb)

{

struct net_device *dev = skb_dst(skb)->dev;

IP_UPD_PO_STATS(net, IPSTATS_MIB_OUT, skb->len);

skb->dev = dev;

skb->protocol = htons(ETH_P_IP);

return NF_HOOK_COND(NFPROTO_IPV4, NF_INET_POST_ROUTING,

net, sk, skb, NULL, dev,

ip_finish_output,

!(IPCB(skb)->flags & IPSKB_REROUTED));

}

static int ip_finish_output(struct net *net, struct sock *sk, struct sk_buff *skb)

{

int ret;

ret = BPF_CGROUP_RUN_PROG_INET_EGRESS(sk, skb);

switch (ret) {

case NET_XMIT_SUCCESS:

return __ip_finish_output(net, sk, skb);

case NET_XMIT_CN:

return __ip_finish_output(net, sk, skb) ? : ret;

default:

kfree_skb(skb);

return ret;

}

}

static int __ip_finish_output(struct net *net, struct sock *sk, struct sk_buff *skb)

{

unsigned int mtu;

#if defined(CONFIG_NETFILTER) && defined(CONFIG_XFRM)

/* Policy lookup after SNAT yielded a new policy */

if (skb_dst(skb)->xfrm) {

IPCB(skb)->flags |= IPSKB_REROUTED;

return dst_output(net, sk, skb);

}

#endif

mtu = ip_skb_dst_mtu(sk, skb);

if (skb_is_gso(skb))

return ip_finish_output_gso(net, sk, skb, mtu);

if (skb->len > mtu || (IPCB(skb)->flags & IPSKB_FRAG_PMTU))

return ip_fragment(net, sk, skb, mtu, ip_finish_output2);//fragment the packet

return ip_finish_output2(net, sk, skb);

}

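The function calls in this part are as follows:

The debugging result is as follows:

Link layer

Ethernet can carry not only IP packets but also packets of other protocols, so the receiving system must be able to tell protocol types apart in order to hand the data to the right routine for further processing. Because analyzing the data to determine the protocol would be time-consuming, the Ethernet frame header contains an identifier: the EtherType of an IP packet is 0x0800, stored in the last two bytes of the 14-byte Ethernet header (defined in include/uapi/linux/if_ether.h: #define ETH_P_IP 0x0800 /* Internet Protocol packet */). All of this is implemented in the link layer. Frame processing in the link layer is driven by interrupt events, and the interrupt path places the frame in an sk_buff structure.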
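As a small illustration of the protocol identifier, the sketch below reads the EtherType from a raw Ethernet II frame. The 14-byte header consists of a 6-byte destination address, a 6-byte source address, and the 2-byte EtherType at byte offsets 12 and 13, in network byte order. The names frame_ethertype, SKETCH_ETH_HLEN, and SKETCH_ETH_P_IP are hypothetical and chosen to avoid clashing with the kernel headers.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SKETCH_ETH_HLEN 14      /* 6 bytes dst + 6 bytes src + 2 bytes EtherType */
#define SKETCH_ETH_P_IP 0x0800  /* same value as ETH_P_IP in if_ether.h */

/*
 * Returns the EtherType of a raw Ethernet frame in host byte order,
 * or 0 if the frame is too short to contain a full header.
 */
uint16_t frame_ethertype(const uint8_t *frame, size_t len)
{
    assert(frame != NULL);
    if (len < SKETCH_ETH_HLEN)
        return 0;
    return (uint16_t)((frame[12] << 8) | frame[13]);
}
```

A receiver would compare the returned value with SKETCH_ETH_P_IP to decide whether to hand the payload to the IPv4 receive routine.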

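To receive and send IP packets correctly, the link layer has to initialize the network-related parts at startup, mainly registering interrupts for the network protocols, setting up timers, and registering softirqs. The details are not examined here; only the flowchart of the initialization logic is given:

(1) Receiving data

Because packet arrival times are unpredictable, all modern device drivers use interrupts to tell the kernel that a packet has arrived. Virtually all network cards now support DMA and can transfer data to physical memory by themselves.

net_interrupt is the interrupt handler installed by the device driver. If the interrupt was raised by a packet (rather than to report an error), control passes to net_rx. net_rx creates a socket buffer, transfers the packet from the network card into the buffer (physical memory), and then analyzes the header to determine which network-layer protocol the packet uses.

It then calls netif_rx, which is not specific to any network driver. This marks the point where control passes from the network card code to the generic interface part of the network layer.

netif_rx receives a packet from the device driver and queues it for processing by the upper-layer protocols. The function always succeeds; the fate of the packet is left to the protocol layer, which may drop it for flow control, for example. The buffer is handed to the networking code. Before finishing, netif_rx marks the softirq NET_RX_SOFTIRQ (include/linux/interrupt.h) as pending and then leaves interrupt context.

The softnet_data array manages the queues for incoming and outgoing packets. A queue is created for each CPU, which allows concurrent processing. The softnet_data structure is defined in include/linux/netdevice.h.

net_rx_action (net/core/dev.c) is the handler for the softirq. It calls the device's poll method (process_backlog by default), and process_backlog loops over all packets, calling __skb_dequeue to remove a socket buffer from the queue.

It then calls __netif_receive_skb (net/core/dev.c), which analyzes the packet type and handles bridging, and then calls deliver_skb (net/core/dev.c), which invokes packet_type->func, the handler specific to the packet type. The code logic is shown in the figure below:

About NAPI: to avoid an interrupt storm when interrupts arrive too quickly, NAPI combines IRQs with polling. A device must meet two conditions to use NAPI: 1. it can buffer several received packets (with DMA, for example); 2. it can disable the IRQ used for packet reception.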

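The debugging result is as follows:

(2) Sending data

When sending, apart from the headers and checksums required by the specific protocols and the data produced by the higher-layer protocol instances, routing the packet is the most important part. Even on a system with a single network card, the kernel must distinguish packets sent to external destinations from those destined for the loopback interface.

The link-layer send function called from the network layer is dev_queue_xmit, which directly calls __dev_queue_xmit.

After the packet has waited in the queue for some time, it can be sent. This is done by the card-specific function hard_start_xmit (exposed as the ndo_start_xmit callback of net_device_ops in recent kernels), which is reached through a function pointer from each net_device structure and is implemented by the hardware device driver.

The function calls in this part are as follows:

The debugging result is as follows:

5. Summary

Based on the above, the overall flow of sending and receiving packets in the Linux network subsystem can be drawn as follows:

As can be seen, function calls at the application layer are very simple: the socket interface hides the low-level details completely and gives the user a simple entry point for sending and receiving data.

The transport layer implements two protocols: 1) the connection-oriented TCP and 2) the connectionless UDP. The two protocols suit different uses, so their implementations differ greatly in complexity, but both eventually hand off to IP in the network layer.

In the network layer, the IP implementation is closer to the hardware and has to handle route lookup, forwarding, and so on. As a result, the send and receive paths are not separated as cleanly as in the higher-layer protocols but are partly interleaved.

The link layer talks to the hardware directly and is event-driven, mainly through the hard-interrupt and softirq mechanisms; the handling may differ slightly from one piece of hardware to another. Softirqs were introduced to solve the problem that a flood of interrupts cannot all be handled in time: interrupt handling is split into a hard-interrupt part that must run quickly and a softirq part that can be deferred, so that the CPU can respond to a large number of interrupts as promptly as possible.

The overall gdb output for debugging TCP receive and send is shown below. Before the first call to __sys_recvfrom/__sys_sendto there is a good deal of IP-layer receiving and sending, and at the very start __skb_dequeue is called many times. This comes from initialization at boot and from bringing up localhost. Later, when the server and client perform the three-way handshake, the IP layer and the data link layer again receive and send data several times. The results match expectations.

The receive-side debugging output is as follows: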

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 3, sockfd_lookup_light (fd=3, err=0xffffc900001b7e80,

fput_needed=0xffffc900001b7e84) at ./include/linux/file.h:62

62 return __to_fd(__fdget(fd));

(gdb) c

Continuing.

Breakpoint 6, fput_light (fput_needed=<optimized out>, file=<optimized out>)

at ./include/linux/file.h:30

30 if (fput_needed)

(gdb) c

Continuing.

Breakpoint 3, sockfd_lookup_light (fd=3, err=0xffffc900001b7f10,

fput_needed=0xffffc900001b7f14) at ./include/linux/file.h:62

62 return __to_fd(__fdget(fd));

(gdb) c

Continuing.

Breakpoint 6, fput_light (fput_needed=<optimized out>, file=<optimized out>)

at ./include/linux/file.h:30

30 if (fput_needed)

(gdb) c

Continuing.

Breakpoint 3, sockfd_lookup_light (fd=3, err=0xffffc900001b7e70,

fput_needed=0xffffc900001b7e74) at ./include/linux/file.h:62

62 return __to_fd(__fdget(fd));

(gdb) c

Continuing.

Breakpoint 3, sockfd_lookup_light (fd=3, err=0xffffc900001a7e80,

fput_needed=0xffffc900001a7e84) at ./include/linux/file.h:62

62 return __to_fd(__fdget(fd));

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 19, net_rx_action (h=0xffffffff82405118 <softirq_vec+24>)

at net/core/dev.c:6343

6343 {

(gdb) c

Continuing.

Breakpoint 20, process_backlog (napi=0xffff888007628a90, quota=64)

at net/core/dev.c:5848

5848 if (sd_has_rps_ipi_waiting(sd)) {

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 22, __netif_receive_skb (skb=0xffff8880064a24e0)

at net/core/dev.c:5011

5011 if (sk_memalloc_socks() && skb_pfmemalloc(skb)) {

(gdb) c

Continuing.

Breakpoint 15, ip_rcv (skb=0xffff8880064a24e0, dev=0xffff888007093000,

pt=0xffffffff8254a560 <ip_packet_type>, orig_dev=0xffff888007093000)

at net/ipv4/ip_input.c:516

516 {

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff8880064a24e0)

at net/ipv4/ip_input.c:408

408 if (!skb)

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=<optimized out>, sk=<optimized out>,

skb=<optimized out>) at net/ipv4/ip_input.c:413

413 ret = dst_input(skb);

(gdb) c

Continuing.

Breakpoint 17, ip_local_deliver (skb=0xffff8880064a24e0)

at net/ipv4/ip_input.c:241

241 {

(gdb) c

Continuing.

Breakpoint 18, ip_local_deliver_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff8880064a24e0)

at net/ipv4/ip_input.c:228

228 __skb_pull(skb, skb_network_header_len(skb));

(gdb) c

Continuing.

Breakpoint 8, tcp_v4_rcv (skb=0xffff8880064a24e0) at net/ipv4/tcp_ipv4.c:1809

1809 {

(gdb) c

Continuing.

Breakpoint 9, __inet_lookup_skb (hashinfo=<optimized out>,

sdif=<optimized out>, refcounted=<optimized out>, dport=<optimized out>,

sport=<optimized out>, doff=<optimized out>, skb=<optimized out>)

at ./include/net/inet_hashtables.h:382

382 struct sock *sk = skb_steal_sock(skb);

(gdb) c

Continuing.

Breakpoint 10, __inet_lookup_established (net=0xffffffff824eeb80 <init_net>,

hashinfo=0xffffffff82b71980 <tcp_hashinfo>, saddr=16777343, sport=6790,

daddr=16777343, hnum=3490, dif=1, sdif=0) at net/ipv4/inet_hashtables.c:352

352 INET_ADDR_COOKIE(acookie, saddr, daddr);

(gdb) c

Continuing.

Breakpoint 11, __inet_lookup_listener (net=0xffffffff824eeb80 <init_net>,

hashinfo=0xffffffff82b71980 <tcp_hashinfo>, skb=0xffff8880064a24e0,

doff=40, saddr=16777343, sport=6790, daddr=16777343, hnum=3490, dif=1,

sdif=0) at net/ipv4/inet_hashtables.c:302

302 hash2 = ipv4_portaddr_hash(net, daddr, hnum);

(gdb) c

Continuing.

Breakpoint 12, tcp_v4_do_rcv (sk=0xffff888006028000, skb=0xffff8880064a24e0)

at net/ipv4/tcp_ipv4.c:1552

1552 if (sk->sk_state == TCP_ESTABLISHED) { /* Fast path */

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 22, __netif_receive_skb (skb=0xffff888007147000)

at net/core/dev.c:5011

5011 if (sk_memalloc_socks() && skb_pfmemalloc(skb)) {

(gdb) c

Continuing.

Breakpoint 15, ip_rcv (skb=0xffff888007147000, dev=0xffff888007093000,

pt=0xffffffff8254a560 <ip_packet_type>, orig_dev=0xffff888007093000)

at net/ipv4/ip_input.c:516

516 {

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff888007147000)

at net/ipv4/ip_input.c:408

408 if (!skb)

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=<optimized out>, sk=<optimized out>,

skb=<optimized out>) at net/ipv4/ip_input.c:413

413 ret = dst_input(skb);

(gdb) c

Continuing.

Breakpoint 17, ip_local_deliver (skb=0xffff888007147000)

at net/ipv4/ip_input.c:241

241 {

(gdb) c

Continuing.

Breakpoint 18, ip_local_deliver_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff888007147000)

at net/ipv4/ip_input.c:228

228 __skb_pull(skb, skb_network_header_len(skb));

(gdb) c

Continuing.

Breakpoint 8, tcp_v4_rcv (skb=0xffff888007147000) at net/ipv4/tcp_ipv4.c:1809

1809 {

(gdb) c

Continuing.

Breakpoint 9, __inet_lookup_skb (hashinfo=<optimized out>,

sdif=<optimized out>, refcounted=<optimized out>, dport=<optimized out>,

sport=<optimized out>, doff=<optimized out>, skb=<optimized out>)

at ./include/net/inet_hashtables.h:382

382 struct sock *sk = skb_steal_sock(skb);

(gdb) c

Continuing.

Breakpoint 10, __inet_lookup_established (net=0xffffffff824eeb80 <init_net>,

hashinfo=0xffffffff82b71980 <tcp_hashinfo>, saddr=16777343, sport=41485,

daddr=16777343, hnum=34330, dif=1, sdif=0)

at net/ipv4/inet_hashtables.c:352

352 INET_ADDR_COOKIE(acookie, saddr, daddr);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 12, tcp_v4_do_rcv (sk=0xffff8880060288c0, skb=0xffff888007147000)

at net/ipv4/tcp_ipv4.c:1552

1552 if (sk->sk_state == TCP_ESTABLISHED) { /* Fast path */

(gdb) c

Continuing.

Breakpoint 14, tcp_fast_path_check (sk=<optimized out>)

at ./include/net/tcp.h:687

687 if (RB_EMPTY_ROOT(&tp->out_of_order_queue) &&

(gdb) c

Continuing.

Breakpoint 14, tcp_fast_path_check (sk=<optimized out>)

at ./include/net/tcp.h:691

691 tcp_fast_path_on(tp);

(gdb) c

Continuing.

Breakpoint 19, net_rx_action (h=0xffffffff82405118 <softirq_vec+24>)

at net/core/dev.c:6343

6343 {

(gdb) c

Continuing.

Breakpoint 20, process_backlog (napi=0xffff888007628a90, quota=64)

at net/core/dev.c:5848

5848 if (sd_has_rps_ipi_waiting(sd)) {

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 22, __netif_receive_skb (skb=0xffff888007147100)

at net/core/dev.c:5011

5011 if (sk_memalloc_socks() && skb_pfmemalloc(skb)) {

(gdb) c

Continuing.

Breakpoint 15, ip_rcv (skb=0xffff888007147100, dev=0xffff888007093000,

pt=0xffffffff8254a560 <ip_packet_type>, orig_dev=0xffff888007093000)

at net/ipv4/ip_input.c:516

516 {

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff888007147100)

at net/ipv4/ip_input.c:408

408 if (!skb)

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=<optimized out>, sk=<optimized out>,

skb=<optimized out>) at net/ipv4/ip_input.c:413

413 ret = dst_input(skb);

(gdb) c

Continuing.

Breakpoint 17, ip_local_deliver (skb=0xffff888007147100)

at net/ipv4/ip_input.c:241

241 {

(gdb) c

Continuing.

Breakpoint 18, ip_local_deliver_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff888007147100)

at net/ipv4/ip_input.c:228

228 __skb_pull(skb, skb_network_header_len(skb));

(gdb) c

Continuing.

Breakpoint 8, tcp_v4_rcv (skb=0xffff888007147100) at net/ipv4/tcp_ipv4.c:1809

1809 {

(gdb) c

Continuing.

Breakpoint 9, __inet_lookup_skb (hashinfo=<optimized out>,

sdif=<optimized out>, refcounted=<optimized out>, dport=<optimized out>,

sport=<optimized out>, doff=<optimized out>, skb=<optimized out>)

at ./include/net/inet_hashtables.h:382

382 struct sock *sk = skb_steal_sock(skb);

(gdb) c

Continuing.

Breakpoint 10, __inet_lookup_established (net=0xffffffff824eeb80 <init_net>,

hashinfo=0xffffffff82b71980 <tcp_hashinfo>, saddr=16777343, sport=6790,

daddr=16777343, hnum=3490, dif=1, sdif=0) at net/ipv4/inet_hashtables.c:352

352 INET_ADDR_COOKIE(acookie, saddr, daddr);

(gdb) c

Continuing.

Breakpoint 13, tcp_data_queue (sk=0xffff888006029180, skb=0xffff888007147100)

at net/ipv4/tcp_input.c:4761

4761 {

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 5, move_addr_to_user (kaddr=0xffffc900001b7e78, klen=16,

uaddr=0x7fff50854f00, ulen=0x7fff50854ee4) at net/socket.c:212

212 BUG_ON(klen > sizeof(struct sockaddr_storage));

(gdb) c

Continuing.

Breakpoint 6, fput_light (fput_needed=<optimized out>, file=<optimized out>)

at ./include/linux/file.h:30

30 if (fput_needed)

(gdb) c

Continuing.

Breakpoint 1, __sys_recvfrom (fd=3, ubuf=0x7ffd72589880, size=100, flags=0,

addr=0x0 <fixed_percpu_data>, addr_len=0x0 <fixed_percpu_data>)

at net/socket.c:1984

1984 {

(gdb) c

Continuing.

Breakpoint 2, import_single_range (rw=0, buf=0x7ffd72589880, len=100,

iov=0xffffc900001a7e18, i=0xffffc900001a7e38) at lib/iov_iter.c:1686

1686 if (len > MAX_RW_COUNT)

(gdb) c

Continuing.

Breakpoint 3, sockfd_lookup_light (fd=3, err=0xffffc900001a7e10,

fput_needed=0xffffc900001a7e14) at ./include/linux/file.h:62

62 return __to_fd(__fdget(fd));

(gdb) c

Continuing.

Breakpoint 4, sock_recvmsg (sock=0xffff888006a22300, msg=0xffffc900001a7e28,

flags=0) at ./include/linux/uio.h:235

235 return i->count;

(gdb) c

Continuing.

Breakpoint 3, sockfd_lookup_light (fd=3, err=0xffffc900001b7e70,

fput_needed=0xffffc900001b7e74) at ./include/linux/file.h:62

62 return __to_fd(__fdget(fd));

(gdb) c

Continuing.

Breakpoint 7, __sys_sendto (fd=4, buff=0x496036, len=14, flags=0,

addr=0x0 <fixed_percpu_data>, addr_len=0) at net/socket.c:1923

1923 {

(gdb) c

Continuing.

Breakpoint 2, import_single_range (rw=1, buf=0x496036, len=14,

iov=0xffffc900001bfe18, i=0xffffc900001bfe38) at lib/iov_iter.c:1686

1686 if (len > MAX_RW_COUNT)

(gdb) c

Continuing.

Breakpoint 3, sockfd_lookup_light (fd=4, err=0xffffc900001bfe10,

fput_needed=0xffffc900001bfe14) at ./include/linux/file.h:62

62 return __to_fd(__fdget(fd));

(gdb) c

Continuing.

Breakpoint 6, fput_light (fput_needed=<optimized out>, file=<optimized out>)

at ./include/linux/file.h:30

30 if (fput_needed)

(gdb) c

Continuing.

Breakpoint 19, net_rx_action (h=0xffffffff82405118 <softirq_vec+24>)

at net/core/dev.c:6343

6343 {

(gdb) c

Continuing.

Breakpoint 20, process_backlog (napi=0xffff888007628a90, quota=64)

at net/core/dev.c:5848

5848 if (sd_has_rps_ipi_waiting(sd)) {

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 22, __netif_receive_skb (skb=0xffff8880064a24e0)

at net/core/dev.c:5011

5011 if (sk_memalloc_socks() && skb_pfmemalloc(skb)) {

(gdb) c

Continuing.

Breakpoint 15, ip_rcv (skb=0xffff8880064a24e0, dev=0xffff888007093000,

pt=0xffffffff8254a560 <ip_packet_type>, orig_dev=0xffff888007093000)

at net/ipv4/ip_input.c:516

516 {

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff8880064a24e0)

at net/ipv4/ip_input.c:408

408 if (!skb)

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=<optimized out>, sk=<optimized out>,

skb=<optimized out>) at net/ipv4/ip_input.c:413

413 ret = dst_input(skb);

(gdb) c

Continuing.

Breakpoint 17, ip_local_deliver (skb=0xffff8880064a24e0)

at net/ipv4/ip_input.c:241

241 {

(gdb) c

Continuing.

Breakpoint 18, ip_local_deliver_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff8880064a24e0)

at net/ipv4/ip_input.c:228

228 __skb_pull(skb, skb_network_header_len(skb));

(gdb) c

Continuing.

Breakpoint 8, tcp_v4_rcv (skb=0xffff8880064a24e0) at net/ipv4/tcp_ipv4.c:1809

1809 {

(gdb) c

Continuing.

Breakpoint 9, __inet_lookup_skb (hashinfo=<optimized out>,

sdif=<optimized out>, refcounted=<optimized out>, dport=<optimized out>,

sport=<optimized out>, doff=<optimized out>, skb=<optimized out>)

at ./include/net/inet_hashtables.h:382

382 struct sock *sk = skb_steal_sock(skb);

(gdb) c

Continuing.

Breakpoint 10, __inet_lookup_established (net=0xffffffff824eeb80 <init_net>,

hashinfo=0xffffffff82b71980 <tcp_hashinfo>, saddr=16777343, sport=41485,

daddr=16777343, hnum=34330, dif=1, sdif=0)

at net/ipv4/inet_hashtables.c:352

352 INET_ADDR_COOKIE(acookie, saddr, daddr);

(gdb) c

Continuing.

Breakpoint 12, tcp_v4_do_rcv (sk=0xffff8880060288c0, skb=0xffff8880064a24e0)

at net/ipv4/tcp_ipv4.c:1552

1552 if (sk->sk_state == TCP_ESTABLISHED) { /* Fast path */

(gdb) c

Continuing.

Breakpoint 14, tcp_fast_path_check (sk=<optimized out>)

at ./include/net/tcp.h:687

687 if (RB_EMPTY_ROOT(&tp->out_of_order_queue) &&

(gdb) c

Continuing.

Breakpoint 14, tcp_fast_path_check (sk=<optimized out>)

at ./include/net/tcp.h:691

691 tcp_fast_path_on(tp);

(gdb) c

Continuing.

Breakpoint 13, tcp_data_queue (sk=0xffff8880060288c0, skb=0xffff8880064a24e0)

at net/ipv4/tcp_input.c:4761

4761 {

(gdb) c

Continuing.

Breakpoint 14, tcp_fast_path_check (sk=<optimized out>)

at ./include/net/tcp.h:687

687 if (RB_EMPTY_ROOT(&tp->out_of_order_queue) &&

(gdb) c

Continuing.

Breakpoint 14, tcp_fast_path_check (sk=<optimized out>)

at ./include/net/tcp.h:691

691 tcp_fast_path_on(tp);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 22, __netif_receive_skb (skb=0xffff888007147100)

at net/core/dev.c:5011

5011 if (sk_memalloc_socks() && skb_pfmemalloc(skb)) {

(gdb) c

Continuing.

Breakpoint 15, ip_rcv (skb=0xffff888007147100, dev=0xffff888007093000,

pt=0xffffffff8254a560 <ip_packet_type>, orig_dev=0xffff888007093000)

at net/ipv4/ip_input.c:516

516 {

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff888007147100)

at net/ipv4/ip_input.c:408

408 if (!skb)

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=<optimized out>, sk=<optimized out>,

skb=<optimized out>) at net/ipv4/ip_input.c:413

413 ret = dst_input(skb);

(gdb) c

Continuing.

Breakpoint 17, ip_local_deliver (skb=0xffff888007147100)

at net/ipv4/ip_input.c:241

241 {

(gdb) c

Continuing.

Breakpoint 18, ip_local_deliver_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff888007147100)

at net/ipv4/ip_input.c:228

228 __skb_pull(skb, skb_network_header_len(skb));

(gdb) c

Continuing.

Breakpoint 8, tcp_v4_rcv (skb=0xffff888007147100) at net/ipv4/tcp_ipv4.c:1809

1809 {

(gdb) c

Continuing.

Breakpoint 9, __inet_lookup_skb (hashinfo=<optimized out>,

sdif=<optimized out>, refcounted=<optimized out>, dport=<optimized out>,

sport=<optimized out>, doff=<optimized out>, skb=<optimized out>)

at ./include/net/inet_hashtables.h:382

382 struct sock *sk = skb_steal_sock(skb);

(gdb) c

Continuing.

Breakpoint 10, __inet_lookup_established (net=0xffffffff824eeb80 <init_net>,

hashinfo=0xffffffff82b71980 <tcp_hashinfo>, saddr=16777343, sport=6790,

daddr=16777343, hnum=3490, dif=1, sdif=0) at net/ipv4/inet_hashtables.c:352

352 INET_ADDR_COOKIE(acookie, saddr, daddr);

(gdb) c

Continuing.

Breakpoint 12, tcp_v4_do_rcv (sk=0xffff888006029180, skb=0xffff888007147100)

at net/ipv4/tcp_ipv4.c:1552

1552 if (sk->sk_state == TCP_ESTABLISHED) { /* Fast path */

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 19, net_rx_action (h=0xffffffff82405118 <softirq_vec+24>)

at net/core/dev.c:6343

6343 {

(gdb) c

Continuing.

Breakpoint 20, process_backlog (napi=0xffff888007628a90, quota=64)

at net/core/dev.c:5848

5848 if (sd_has_rps_ipi_waiting(sd)) {

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 22, __netif_receive_skb (skb=0xffff8880064a24e0)

at net/core/dev.c:5011

5011 if (sk_memalloc_socks() && skb_pfmemalloc(skb)) {

(gdb) c

Continuing.

Breakpoint 15, ip_rcv (skb=0xffff8880064a24e0, dev=0xffff888007093000,

pt=0xffffffff8254a560 <ip_packet_type>, orig_dev=0xffff888007093000)

at net/ipv4/ip_input.c:516

516 {

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff8880064a24e0)

at net/ipv4/ip_input.c:408

408 if (!skb)

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=<optimized out>, sk=<optimized out>,

skb=<optimized out>) at net/ipv4/ip_input.c:413

413 ret = dst_input(skb);

(gdb) c

Continuing.

Breakpoint 17, ip_local_deliver (skb=0xffff8880064a24e0)

at net/ipv4/ip_input.c:241

241 {

(gdb) c

Continuing.

Breakpoint 18, ip_local_deliver_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff8880064a24e0)

at net/ipv4/ip_input.c:228

228 __skb_pull(skb, skb_network_header_len(skb));

(gdb) c

Continuing.

Breakpoint 8, tcp_v4_rcv (skb=0xffff8880064a24e0) at net/ipv4/tcp_ipv4.c:1809

1809 {

(gdb) c

Continuing.

Breakpoint 9, __inet_lookup_skb (hashinfo=<optimized out>,

sdif=<optimized out>, refcounted=<optimized out>, dport=<optimized out>,

sport=<optimized out>, doff=<optimized out>, skb=<optimized out>)

at ./include/net/inet_hashtables.h:382

382 struct sock *sk = skb_steal_sock(skb);

(gdb) c

Continuing.

Breakpoint 10, __inet_lookup_established (net=0xffffffff824eeb80 <init_net>,

hashinfo=0xffffffff82b71980 <tcp_hashinfo>, saddr=16777343, sport=41485,

daddr=16777343, hnum=34330, dif=1, sdif=0)

at net/ipv4/inet_hashtables.c:352

352 INET_ADDR_COOKIE(acookie, saddr, daddr);

(gdb) c

Continuing.

Breakpoint 12, tcp_v4_do_rcv (sk=0xffff8880060288c0, skb=0xffff8880064a24e0)

at net/ipv4/tcp_ipv4.c:1552

1552 if (sk->sk_state == TCP_ESTABLISHED) { /* Fast path */

(gdb) c

Continuing.

Breakpoint 13, tcp_data_queue (sk=0xffff8880060288c0, skb=0xffff8880064a24e0)

at net/ipv4/tcp_input.c:4761

4761 {

(gdb) c

Continuing.

Breakpoint 14, tcp_fast_path_check (sk=<optimized out>)

at ./include/net/tcp.h:687

687 if (RB_EMPTY_ROOT(&tp->out_of_order_queue) &&

(gdb) c

Continuing.

Breakpoint 14, tcp_fast_path_check (sk=<optimized out>)

at ./include/net/tcp.h:691

691 tcp_fast_path_on(tp);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 22, __netif_receive_skb (skb=0xffff8880064a26e0)

at net/core/dev.c:5011

5011 if (sk_memalloc_socks() && skb_pfmemalloc(skb)) {

(gdb) c

Continuing.

Breakpoint 15, ip_rcv (skb=0xffff8880064a26e0, dev=0xffff888007093000,

pt=0xffffffff8254a560 <ip_packet_type>, orig_dev=0xffff888007093000)

at net/ipv4/ip_input.c:516

516 {

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff8880064a26e0)

at net/ipv4/ip_input.c:408

408 if (!skb)

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=<optimized out>, sk=<optimized out>,

skb=<optimized out>) at net/ipv4/ip_input.c:413

413 ret = dst_input(skb);

(gdb) c

Continuing.

Breakpoint 17, ip_local_deliver (skb=0xffff8880064a26e0)

at net/ipv4/ip_input.c:241

241 {

(gdb) c

Continuing.

Breakpoint 18, ip_local_deliver_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff8880064a26e0)

at net/ipv4/ip_input.c:228

228 __skb_pull(skb, skb_network_header_len(skb));

(gdb) c

Continuing.

Breakpoint 8, tcp_v4_rcv (skb=0xffff8880064a26e0) at net/ipv4/tcp_ipv4.c:1809

1809 {

(gdb) c

Continuing.

Breakpoint 9, __inet_lookup_skb (hashinfo=<optimized out>,

sdif=<optimized out>, refcounted=<optimized out>, dport=<optimized out>,

sport=<optimized out>, doff=<optimized out>, skb=<optimized out>)

at ./include/net/inet_hashtables.h:382

382 struct sock *sk = skb_steal_sock(skb);

(gdb) c

Continuing.

Breakpoint 10, __inet_lookup_established (net=0xffffffff824eeb80 <init_net>,

hashinfo=0xffffffff82b71980 <tcp_hashinfo>, saddr=16777343, sport=6790,

daddr=16777343, hnum=3490, dif=1, sdif=0) at net/ipv4/inet_hashtables.c:352

352 INET_ADDR_COOKIE(acookie, saddr, daddr);

(gdb) c

Continuing.

Breakpoint 12, tcp_v4_do_rcv (sk=0xffff888006029180, skb=0xffff8880064a26e0)

at net/ipv4/tcp_ipv4.c:1552

1552 if (sk->sk_state == TCP_ESTABLISHED) { /* Fast path */

(gdb) c

Continuing.

Breakpoint 13, tcp_data_queue (sk=0xffff888006029180, skb=0xffff8880064a26e0)

at net/ipv4/tcp_input.c:4761

4761 {

(gdb) c

Continuing.

Breakpoint 14, tcp_fast_path_check (sk=<optimized out>)

at ./include/net/tcp.h:687

687 if (RB_EMPTY_ROOT(&tp->out_of_order_queue) &&

(gdb) c

Continuing.

Breakpoint 14, tcp_fast_path_check (sk=<optimized out>)

at ./include/net/tcp.h:691

691 tcp_fast_path_on(tp);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 22, __netif_receive_skb (skb=0xffff888007147100)

at net/core/dev.c:5011

5011 if (sk_memalloc_socks() && skb_pfmemalloc(skb)) {

(gdb) c

Continuing.

Breakpoint 15, ip_rcv (skb=0xffff888007147100, dev=0xffff888007093000,

pt=0xffffffff8254a560 <ip_packet_type>, orig_dev=0xffff888007093000)

at net/ipv4/ip_input.c:516

516 {

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff888007147100)

at net/ipv4/ip_input.c:408

408 if (!skb)

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=<optimized out>, sk=<optimized out>,

skb=<optimized out>) at net/ipv4/ip_input.c:413

413 ret = dst_input(skb);

(gdb) c

Continuing.

Breakpoint 17, ip_local_deliver (skb=0xffff888007147100)

at net/ipv4/ip_input.c:241

241 {

(gdb) c

Continuing.

Breakpoint 18, ip_local_deliver_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff888007147100)

at net/ipv4/ip_input.c:228

228 __skb_pull(skb, skb_network_header_len(skb));

(gdb) c

Continuing.

Breakpoint 8, tcp_v4_rcv (skb=0xffff888007147100) at net/ipv4/tcp_ipv4.c:1809

1809 {

(gdb) c

Continuing.

Breakpoint 9, __inet_lookup_skb (hashinfo=<optimized out>,

sdif=<optimized out>, refcounted=<optimized out>, dport=<optimized out>,

sport=<optimized out>, doff=<optimized out>, skb=<optimized out>)

at ./include/net/inet_hashtables.h:382

382 struct sock *sk = skb_steal_sock(skb);

(gdb) c

Continuing.

Breakpoint 10, __inet_lookup_established (net=0xffffffff824eeb80 <init_net>,

hashinfo=0xffffffff82b71980 <tcp_hashinfo>, saddr=16777343, sport=6790,

daddr=16777343, hnum=3490, dif=1, sdif=0) at net/ipv4/inet_hashtables.c:352

352 INET_ADDR_COOKIE(acookie, saddr, daddr);

(gdb) c

Continuing.

Breakpoint 12, tcp_v4_do_rcv (sk=0xffff888006029180, skb=0xffff888007147100)

at net/ipv4/tcp_ipv4.c:1552

1552 if (sk->sk_state == TCP_ESTABLISHED) { /* Fast path */

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 22, __netif_receive_skb (skb=0xffff888007147000)

at net/core/dev.c:5011

5011 if (sk_memalloc_socks() && skb_pfmemalloc(skb)) {

(gdb) c

Continuing.

Breakpoint 15, ip_rcv (skb=0xffff888007147000, dev=0xffff888007093000,

pt=0xffffffff8254a560 <ip_packet_type>, orig_dev=0xffff888007093000)

at net/ipv4/ip_input.c:516

516 {

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff888007147000)

at net/ipv4/ip_input.c:408

408 if (!skb)

(gdb) c

Continuing.

Breakpoint 16, ip_rcv_finish (net=<optimized out>, sk=<optimized out>,

skb=<optimized out>) at net/ipv4/ip_input.c:413

413 ret = dst_input(skb);

(gdb) c

Continuing.

Breakpoint 17, ip_local_deliver (skb=0xffff888007147000)

at net/ipv4/ip_input.c:241

241 {

(gdb) c

Continuing.

Breakpoint 18, ip_local_deliver_finish (net=0xffffffff824eeb80 <init_net>,

sk=0x0 <fixed_percpu_data>, skb=0xffff888007147000)

at net/ipv4/ip_input.c:228

228 __skb_pull(skb, skb_network_header_len(skb));

(gdb) c

Continuing.

Breakpoint 8, tcp_v4_rcv (skb=0xffff888007147000) at net/ipv4/tcp_ipv4.c:1809

1809 {

(gdb) c

Continuing.

Breakpoint 9, __inet_lookup_skb (hashinfo=<optimized out>,

sdif=<optimized out>, refcounted=<optimized out>, dport=<optimized out>,

sport=<optimized out>, doff=<optimized out>, skb=<optimized out>)

at ./include/net/inet_hashtables.h:382

382 struct sock *sk = skb_steal_sock(skb);

(gdb) c

Continuing.

Breakpoint 10, __inet_lookup_established (net=0xffffffff824eeb80 <init_net>,

hashinfo=0xffffffff82b71980 <tcp_hashinfo>, saddr=16777343, sport=41485,

daddr=16777343, hnum=34330, dif=1, sdif=0)

at net/ipv4/inet_hashtables.c:352

352 INET_ADDR_COOKIE(acookie, saddr, daddr);

(gdb) c

Continuing.

Breakpoint 12, tcp_v4_do_rcv (sk=0xffff8880060288c0, skb=0xffff888007147000)

at net/ipv4/tcp_ipv4.c:1552

1552 if (sk->sk_state == TCP_ESTABLISHED) { /* Fast path */

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

Breakpoint 21, __skb_dequeue (list=<optimized out>)

at ./include/linux/skbuff.h:2042

2042 struct sk_buff *skb = skb_peek(list);

(gdb) c

Continuing.

(gdb)

The following is the gdb output captured while debugging the data-sending path.

Breakpoint 11, tcp_send_head (sk=<optimized out>) at ./include/net/tcp.h:1754

1754 return skb_peek(&sk->sk_write_queue);

(gdb) c

Continuing.

Breakpoint 11, tcp_send_head (sk=<optimized out>) at ./include/net/tcp.h:1754

1754 return skb_peek(&sk->sk_write_queue);

(gdb) c

Continuing.

Breakpoint 11, tcp_send_head (sk=<optimized out>) at ./include/net/tcp.h:1754

1754 return skb_peek(&sk->sk_write_queue);

(gdb) c

Continuing.

Breakpoint 6, fput_light (fput_needed=<optimized out>, file=<optimized out>)

at ./include/linux/file.h:30

30 if (fput_needed)

(gdb) c

Continuing.

Breakpoint 3, sockfd_lookup_light (fd=3, err=0xffffc900001b7e70,

fput_needed=0xffffc900001b7e74) at ./include/linux/file.h:62

62 return __to_fd(__fdget(fd));

(gdb) c

Continuing.

Breakpoint 1, __sys_sendto (fd=4, buff=0x496036, len=14, flags=0,

addr=0x0 <fixed_percpu_data>, addr_len=0) at net/socket.c:1923

1923 {

(gdb) c

Continuing.

Breakpoint 2, import_single_range (rw=1, buf=0x496036, len=14,

iov=0xffffc900001bfe18, i=0xffffc900001bfe38) at lib/iov_iter.c:1686

1686 if (len > MAX_RW_COUNT)

(gdb) c

Continuing.

Breakpoint 3, sockfd_lookup_light (fd=4, err=0xffffc900001bfe10,

fput_needed=0xffffc900001bfe14) at ./include/linux/file.h:62

62 return __to_fd(__fdget(fd));

(gdb) c

Continuing.

Breakpoint 5, sock_sendmsg (sock=0xffff888006a22000, msg=0xffffc900001bfe28)

at ./include/linux/uio.h:235

235 return i->count;

(gdb) c

Continuing.

Breakpoint 7, tcp_sendmsg (sk=0xffff888006029180, msg=0xffffc900001bfe28,

size=14) at ./include/net/sock.h:1532

1532 lock_sock_nested(sk, 0);

(gdb) c

Continuing.

Breakpoint 10, tcp_write_xmit (sk=0xffff888006029180, mss_now=32752,

nonagle=0, push_one=0, gfp=2592) at ./include/linux/timekeeping.h:154

154 return ktime_to_ns(ktime_get());

(gdb) c

Continuing.

Breakpoint 11, tcp_send_head (sk=<optimized out>) at ./include/net/tcp.h:1754

1754 return skb_peek(&sk->sk_write_queue);

(gdb) c

Continuing.

Breakpoint 12, tcp_transmit_skb (gfp_mask=<optimized out>,

clone_it=<optimized out>, skb=<optimized out>, sk=<optimized out>)

at ./include/linux/tcp.h:422

422 return (struct tcp_sock *)sk;

(gdb) c

Continuing.

Breakpoint 13, __tcp_transmit_skb (sk=0xffff888006029180,

skb=0xffff8880064a2400, clone_it=1, gfp_mask=2592, rcv_nxt=3082819330)

at net/ipv4/tcp_output.c:1019

1019 {

(gdb) c

Continuing.

Breakpoint 17, ip_queue_xmit (sk=0xffff888006029180, skb=0xffff8880064a24e0,

fl=0xffff8880060294d8) at ./include/net/inet_sock.h:288

288 return (struct inet_sock *)sk;

(gdb) c

Continuing.

Breakpoint 18, ip_local_out (net=0xffffffff824eeb80 <init_net>,

sk=0xffff888006029180, skb=0xffff8880064a24e0) at net/ipv4/ip_output.c:123

123 err = __ip_local_out(net, sk, skb);

(gdb) c

Continuing.

Breakpoint 19, dst_output (skb=<optimized out>, sk=<optimized out>,

net=<optimized out>) at ./include/net/dst.h:436

436 return skb_dst(skb)->output(net, sk, skb);

(gdb) c

Continuing.

Breakpoint 20, ip_finish_output (skb=<optimized out>, sk=<optimized out>,

net=<optimized out>) at net/ipv4/ip_output.c:318

318 return __ip_finish_output(net, sk, skb);

(gdb) c

Continuing.

Breakpoint 21, ip_finish_output2 (net=0xffffffff824eeb80 <init_net>,

sk=0xffff888006029180, skb=0xffff8880064a24e0) at net/ipv4/ip_output.c:186

186 {

(gdb) c

Continuing.

Breakpoint 22, dev_queue_xmit (skb=0xffff8880064a24e0) at net/core/dev.c:3808

3808 return __dev_queue_xmit(skb, NULL);

(gdb) c

Continuing.

Breakpoint 23, xmit_one (more=<optimized out>, txq=<optimized out>,

dev=<optimized out>, skb=<optimized out>) at net/core/dev.c:3195

3195 if (dev_nit_active(dev))

(gdb) c

Continuing.

Breakpoint 24, netdev_start_xmit (more=<optimized out>, txq=<optimized out>,

dev=<optimized out>, skb=<optimized out>)

at ./include/linux/netdevice.h:4430

4430 const struct net_device_ops *ops = dev->netdev_ops;

(gdb) c

Continuing.

Breakpoint 24, netdev_start_xmit (skb=<optimized out>, dev=<optimized out>,

more=<optimized out>, txq=<optimized out>)

at ./include/linux/netdevice.h:4435

4435 txq_trans_update(txq);

(gdb) c

Continuing.

Breakpoint 14, tcp_update_skb_after_send (sk=0xffff888006029180,

skb=0xffff8880064a2400, prior_wstamp=0) at net/ipv4/tcp_output.c:987

987 if (sk->sk_pacing_status != SK_PACING_NONE) {

(gdb) c

Continuing.

Breakpoint 11, tcp_send_head (sk=<optimized out>) at ./include/net/tcp.h:1754

1754 return skb_peek(&sk->sk_write_queue);

(gdb) c

Continuing.

Breakpoint 6, fput_light (fput_needed=<optimized out>, file=<optimized out>)

at ./include/linux/file.h:30

30 if (fput_needed)

(gdb) c

Continuing.

Breakpoint 11, tcp_send_head (sk=<optimized out>) at ./include/net/tcp.h:1754

1754 return skb_peek(&sk->sk_write_queue);

(gdb) c

Continuing.

Breakpoint 11, tcp_send_head (sk=<optimized out>) at ./include/net/tcp.h:1754

1754 return skb_peek(&sk->sk_write_queue);

(gdb) c

Continuing.

Breakpoint 13, __tcp_transmit_skb (sk=0xffff8880060288c0,

skb=0xffff888007147100, clone_it=0, gfp_mask=0, rcv_nxt=309559444)

at net/ipv4/tcp_output.c:1019

1019 {

(gdb) c

Continuing.

Breakpoint 17, ip_queue_xmit (sk=0xffff8880060288c0, skb=0xffff888007147100,

fl=0xffff888006028c18) at ./include/net/inet_sock.h:288

288 return (struct inet_sock *)sk;

(gdb) c

Continuing.

Breakpoint 18, ip_local_out (net=0xffffffff824eeb80 <init_net>,

sk=0xffff8880060288c0, skb=0xffff888007147100) at net/ipv4/ip_output.c:123

123 err = __ip_local_out(net, sk, skb);

(gdb) c

Continuing.

Breakpoint 19, dst_output (skb=<optimized out>, sk=<optimized out>,

net=<optimized out>) at ./include/net/dst.h:436

436 return skb_dst(skb)->output(net, sk, skb);

(gdb) c

Continuing.

Breakpoint 20, ip_finish_output (skb=<optimized out>, sk=<optimized out>,

net=<optimized out>) at net/ipv4/ip_output.c:318

318 return __ip_finish_output(net, sk, skb);

(gdb) c

Continuing.

Breakpoint 21, ip_finish_output2 (net=0xffffffff824eeb80 <init_net>,

sk=0xffff8880060288c0, skb=0xffff888007147100) at net/ipv4/ip_output.c:186

186 {

(gdb) c

Continuing.

Breakpoint 22, dev_queue_xmit (skb=0xffff888007147100) at net/core/dev.c:3808

3808 return __dev_queue_xmit(skb, NULL);

(gdb) c

Continuing.

Breakpoint 23, xmit_one (more=<optimized out>, txq=<optimized out>,

dev=<optimized out>, skb=<optimized out>) at net/core/dev.c:3195

3195 if (dev_nit_active(dev))

(gdb) c

Continuing.

Breakpoint 24, netdev_start_xmit (more=<optimized out>, txq=<optimized out>,

dev=<optimized out>, skb=<optimized out>)

at ./include/linux/netdevice.h:4430

4430 const struct net_device_ops *ops = dev->netdev_ops;

(gdb) c

Continuing.

Breakpoint 24, netdev_start_xmit (skb=<optimized out>, dev=<optimized out>,

more=<optimized out>, txq=<optimized out>)

at ./include/linux/netdevice.h:4435

4435 txq_trans_update(txq);

(gdb) c

Continuing.

Breakpoint 11, tcp_send_head (sk=<optimized out>) at ./include/net/tcp.h:1754

1754 return skb_peek(&sk->sk_write_queue);

(gdb) c

Continuing.

Breakpoint 2, import_single_range (rw=0, buf=0x7fff6a8df030, len=100,

iov=0xffffc900001a7e18, i=0xffffc900001a7e38) at lib/iov_iter.c:1686

1686 if (len > MAX_RW_COUNT)

(gdb) c

Continuing.

Breakpoint 3, sockfd_lookup_light (fd=3, err=0xffffc900001a7e10,

fput_needed=0xffffc900001a7e14) at ./include/linux/file.h:62

62 return __to_fd(__fdget(fd));

(gdb) c

Continuing.

Breakpoint 10, tcp_write_xmit (sk=0xffff888006029180, mss_now=32752,

nonagle=1, push_one=0, gfp=2592) at ./include/linux/timekeeping.h:154

154 return ktime_to_ns(ktime_get());

(gdb) c

Continuing.

Breakpoint 11, tcp_send_head (sk=<optimized out>) at ./include/net/tcp.h:1754

1754 return skb_peek(&sk->sk_write_queue);

(gdb) c

Continuing.

Breakpoint 12, tcp_transmit_skb (gfp_mask=<optimized out>,

clone_it=<optimized out>, skb=<optimized out>, sk=<optimized out>)

at ./include/linux/tcp.h:422

422 return (struct tcp_sock *)sk;

(gdb) c

Continuing.

Breakpoint 13, __tcp_transmit_skb (sk=0xffff888006029180,

skb=0xffff8880064a2400, clone_it=1, gfp_mask=2592, rcv_nxt=3082819330)

at net/ipv4/tcp_output.c:1019

1019 {

(gdb) c

Continuing.

Breakpoint 17, ip_queue_xmit (sk=0xffff888006029180, skb=0xffff8880064a24e0,

fl=0xffff8880060294d8) at ./include/net/inet_sock.h:288

288 return (struct inet_sock *)sk;

(gdb) c

Continuing.

Breakpoint 18, ip_local_out (net=0xffffffff824eeb80 <init_net>,

sk=0xffff888006029180, skb=0xffff8880064a24e0) at net/ipv4/ip_output.c:123

123 err = __ip_local_out(net, sk, skb);

(gdb) c

Continuing.

Breakpoint 19, dst_output (skb=<optimized out>, sk=<optimized out>,

net=<optimized out>) at ./include/net/dst.h:436

436 return skb_dst(skb)->output(net, sk, skb);

(gdb) c

Continuing.

Breakpoint 20, ip_finish_output (skb=<optimized out>, sk=<optimized out>,

net=<optimized out>) at net/ipv4/ip_output.c:318

318 return __ip_finish_output(net, sk, skb);

(gdb) c

Continuing.

Breakpoint 21, ip_finish_output2 (net=0xffffffff824eeb80 <init_net>,

sk=0xffff888006029180, skb=0xffff8880064a24e0) at net/ipv4/ip_output.c:186

186 {

(gdb) c

Continuing.

Breakpoint 22, dev_queue_xmit (skb=0xffff8880064a24e0) at net/core/dev.c:3808

3808 return __dev_queue_xmit(skb, NULL);

(gdb) c

Continuing.

Breakpoint 23, xmit_one (more=<optimized out>, txq=<optimized out>,

dev=<optimized out>, skb=<optimized out>) at net/core/dev.c:3195

3195 if (dev_nit_active(dev))

(gdb) c

Continuing.

Breakpoint 24, netdev_start_xmit (more=<optimized out>, txq=<optimized out>,

dev=<optimized out>, skb=<optimized out>)

at ./include/linux/netdevice.h:4430

4430 const struct net_device_ops *ops = dev->netdev_ops;

(gdb) c

Continuing.

Breakpoint 24, netdev_start_xmit (skb=<optimized out>, dev=<optimized out>,

more=<optimized out>, txq=<optimized out>)

at ./include/linux/netdevice.h:4435

4435 txq_trans_update(txq);

(gdb) c

Continuing.

Breakpoint 14, tcp_update_skb_after_send (sk=0xffff888006029180,

skb=0xffff8880064a2400, prior_wstamp=42621249220)

at net/ipv4/tcp_output.c:987

987 if (sk->sk_pacing_status != SK_PACING_NONE) {

(gdb) c

Continuing.

Breakpoint 11, tcp_send_head (sk=<optimized out>) at ./include/net/tcp.h:1754

1754 return skb_peek(&sk->sk_write_queue);

(gdb) c

Continuing.

Breakpoint 11, tcp_send_head (sk=<optimized out>) at ./include/net/tcp.h:1754

1754 return skb_peek(&sk->sk_write_queue);

(gdb) c

Continuing.

Breakpoint 11, tcp_send_head (sk=<optimized out>) at ./include/net/tcp.h:1754

1754 return skb_peek(&sk->sk_write_queue);

(gdb) c

Continuing.

Breakpoint 10, tcp_write_xmit (sk=0xffff8880060288c0, mss_now=32744,

nonagle=1, push_one=0, gfp=2592) at ./include/linux/timekeeping.h:154

154 return ktime_to_ns(ktime_get());

(gdb) c

Continuing.

Breakpoint 11, tcp_send_head (sk=<optimized out>) at ./include/net/tcp.h:1754

1754 return skb_peek(&sk->sk_write_queue);

(gdb) c

Continuing.

Breakpoint 12, tcp_transmit_skb (gfp_mask=<optimized out>,

clone_it=<optimized out>, skb=<optimized out>, sk=<optimized out>)

at ./include/linux/tcp.h:422

422 return (struct tcp_sock *)sk;

(gdb) c

Continuing.

Breakpoint 13, __tcp_transmit_skb (sk=0xffff8880060288c0,

skb=0xffff8880064a2600, clone_it=1, gfp_mask=2592, rcv_nxt=309559445)

at net/ipv4/tcp_output.c:1019

1019 {

(gdb) c

Continuing.

Breakpoint 17, ip_queue_xmit (sk=0xffff8880060288c0, skb=0xffff8880064a26e0,

fl=0xffff888006028c18) at ./include/net/inet_sock.h:288

288 return (struct inet_sock *)sk;

(gdb) c

Continuing.

Breakpoint 18, ip_local_out (net=0xffffffff824eeb80 <init_net>,

sk=0xffff8880060288c0, skb=0xffff8880064a26e0) at net/ipv4/ip_output.c:123

123 err = __ip_local_out(net, sk, skb);

(gdb) c

Continuing.

Breakpoint 19, dst_output (skb=<optimized out>, sk=<optimized out>,

net=<optimized out>) at ./include/net/dst.h:436

436 return skb_dst(skb)->output(net, sk, skb);

(gdb) c

Continuing.

Breakpoint 20, ip_finish_output (skb=<optimized out>, sk=<optimized out>,

net=<optimized out>) at net/ipv4/ip_output.c:318

318 return __ip_finish_output(net, sk, skb);

(gdb) c

Continuing.

Breakpoint 21, ip_finish_output2 (net=0xffffffff824eeb80 <init_net>,

sk=0xffff8880060288c0, skb=0xffff8880064a26e0) at net/ipv4/ip_output.c:186

186 {

(gdb) c

Continuing.

Breakpoint 22, dev_queue_xmit (skb=0xffff8880064a26e0) at net/core/dev.c:3808

3808 return __dev_queue_xmit(skb, NULL);

(gdb) c

Continuing.

Breakpoint 23, xmit_one (more=<optimized out>, txq=<optimized out>,

dev=<optimized out>, skb=<optimized out>) at net/core/dev.c:3195

3195 if (dev_nit_active(dev))

(gdb) c

Continuing.

Breakpoint 24, netdev_start_xmit (more=<optimized out>, txq=<optimized out>,

dev=<optimized out>, skb=<optimized out>)

at ./include/linux/netdevice.h:4430

4430 const struct net_device_ops *ops = dev->netdev_ops;

(gdb) c

Continuing.

Breakpoint 24, netdev_start_xmit (skb=<optimized out>, dev=<optimized out>,

more=<optimized out>, txq=<optimized out>)

at ./include/linux/netdevice.h:4435

4435 txq_trans_update(txq);

(gdb) c

Continuing.

Breakpoint 14, tcp_update_skb_after_send (sk=0xffff8880060288c0,

skb=0xffff8880064a2600, prior_wstamp=42744137002)

at net/ipv4/tcp_output.c:987

987 if (sk->sk_pacing_status != SK_PACING_NONE) {

(gdb) c

Continuing.

Breakpoint 11, tcp_send_head (sk=<optimized out>) at ./include/net/tcp.h:1754

1754 return skb_peek(&sk->sk_write_queue);

(gdb) c

Continuing.

Breakpoint 13, __tcp_transmit_skb (sk=0xffff888006029180,

skb=0xffff888007147100, clone_it=0, gfp_mask=0, rcv_nxt=3082819331)

at net/ipv4/tcp_output.c:1019

1019 {

(gdb) c

Continuing.

Breakpoint 17, ip_queue_xmit (sk=0xffff888006029180, skb=0xffff888007147100,

fl=0xffff8880060294d8) at ./include/net/inet_sock.h:288

288 return (struct inet_sock *)sk;

(gdb) c

Continuing.

Breakpoint 18, ip_local_out (net=0xffffffff824eeb80 <init_net>,

sk=0xffff888006029180, skb=0xffff888007147100) at net/ipv4/ip_output.c:123

123 err = __ip_local_out(net, sk, skb);

(gdb) c

Continuing.

Breakpoint 19, dst_output (skb=<optimized out>, sk=<optimized out>,

net=<optimized out>) at ./include/net/dst.h:436

436 return skb_dst(skb)->output(net, sk, skb);

(gdb) c

Continuing.

Breakpoint 20, ip_finish_output (skb=<optimized out>, sk=<optimized out>,

net=<optimized out>) at net/ipv4/ip_output.c:318

318 return __ip_finish_output(net, sk, skb);

(gdb) c

Continuing.

Breakpoint 21, ip_finish_output2 (net=0xffffffff824eeb80 <init_net>,

sk=0xffff888006029180, skb=0xffff888007147100) at net/ipv4/ip_output.c:186

186 {

(gdb) c

Continuing.

Breakpoint 22, dev_queue_xmit (skb=0xffff888007147100) at net/core/dev.c:3808

3808 return __dev_queue_xmit(skb, NULL);

(gdb) c

Continuing.

Breakpoint 23, xmit_one (more=<optimized out>, txq=<optimized out>,

dev=<optimized out>, skb=<optimized out>) at net/core/dev.c:3195

3195 if (dev_nit_active(dev))

(gdb) c

Continuing.

Breakpoint 24, netdev_start_xmit (more=<optimized out>, txq=<optimized out>,

dev=<optimized out>, skb=<optimized out>)

at ./include/linux/netdevice.h:4430

4430 const struct net_device_ops *ops = dev->netdev_ops;

(gdb) c

Continuing.

Breakpoint 24, netdev_start_xmit (skb=<optimized out>, dev=<optimized out>,

more=<optimized out>, txq=<optimized out>)

at ./include/linux/netdevice.h:4435

4435 txq_trans_update(txq);

(gdb) c