Master-worker pattern

https://blog.csdn.net/u012845099/article/details/78463324

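The Master-Worker pattern is one of the most commonly used parallel patterns. Its core idea is that two kinds of processes cooperate: the Master process receives and distributes tasks, and the Worker processes handle the subtasks. When a Worker finishes a subtask, it returns the result to the Master, which aggregates all the results into the final result.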
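A minimal Python sketch of the pattern just described, assuming a POSIX system (it uses the `fork` start method of `multiprocessing`). The names `master` and `worker`, the `None` sentinel used for shutdown, and the squaring subtask are illustrative choices, not part of the linked article: the master puts subtasks on a task queue, each worker sends its partial result back on a result queue, and the master sums them.

```python
import multiprocessing as mp


def worker(task_queue, result_queue):
    """Worker: process subtasks until the None sentinel arrives."""
    while True:
        task = task_queue.get()
        if task is None:                  # sentinel: no more work for this worker
            break
        result_queue.put(task * task)     # the illustrative subtask: square a number


def master(numbers, n_workers=4):
    """Master: distribute subtasks, collect the partial results, aggregate them."""
    numbers = list(numbers)
    ctx = mp.get_context("fork")          # assumption: POSIX system with fork()
    task_queue, result_queue = ctx.Queue(), ctx.Queue()
    workers = [ctx.Process(target=worker, args=(task_queue, result_queue))
               for _ in range(n_workers)]
    for w in workers:
        w.start()
    for n in numbers:                     # distribute the subtasks
        task_queue.put(n)
    for _ in workers:                     # one sentinel per worker
        task_queue.put(None)
    total = sum(result_queue.get() for _ in numbers)   # aggregate the results
    for w in workers:                     # join only after all results were drained
        w.join()
    return total


if __name__ == "__main__":
    print(master(range(1, 11)))           # 1^2 + 2^2 + ... + 10^2 = 385
```

Draining the result queue before `join()` matters: a worker whose queued results have not been consumed may not be able to exit.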

1. What is the Master-Worker pattern?

Structure diagram of the pattern:

Code Demo

https://github.com/thakreshardul/Master-worker-architecture

The submitted code implements a master-worker mechanism in which the master computes the sum of the series e^x. Each term of the series is computed by a different worker spawned by the master, and the master then sums the values returned by all the workers. Four mechanisms are implemented for reading from the dedicated pipe assigned to each worker:

1. sequential
2. select
3. poll
4. epoll

Instructions to run: run `make`.

Issues faced during implementation:

1. Communication over pipes that have been duplicated onto STDOUT_FILENO.
2. The worker prints different values on STDOUT depending on whether it is called from the master or run on its own.
3. To optimise select, I had to remove each pipe from the fd_set after it was read, so that it would not be read again.
4. The same applies to poll and epoll.
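The e^x demo above can be approximated in Python with the same primitives (`os.fork`, `os.pipe`, `select`). This is a hypothetical sketch, not a port of the linked repository: it assumes a POSIX system, implements only the select variant (the standard library also provides `select.poll` and, on Linux, `select.epoll`), and, as in issue 3, drops each pipe from the watched set once it has been read. The names `term` and `exp_series` are invented for illustration.

```python
import math
import os
import select


def term(x, n):
    """n-th term of the Maclaurin series e^x = sum over n >= 0 of x^n / n!."""
    return x ** n / math.factorial(n)


def exp_series(x, n_terms=20):
    """Master: fork one worker per term, read every worker's pipe, sum the terms."""
    pending, pids = set(), []
    for n in range(n_terms):
        r, w = os.pipe()                  # dedicated pipe for this worker
        pid = os.fork()
        if pid == 0:                      # worker process
            try:
                os.close(r)
                os.write(w, repr(term(x, n)).encode())
            finally:
                os._exit(0)               # never fall back into the master's code
        os.close(w)                       # master keeps only the read end
        pending.add(r)
        pids.append(pid)

    total = 0.0
    while pending:
        readable, _, _ = select.select(list(pending), [], [])
        for fd in readable:
            chunks = []
            while chunk := os.read(fd, 4096):   # read until EOF (worker exited)
                chunks.append(chunk)
            total += float(b"".join(chunks).decode())
            os.close(fd)
            pending.discard(fd)           # remove it so select() never reports it again
    for pid in pids:
        os.waitpid(pid, 0)                # reap the workers to avoid zombies
    return total


if __name__ == "__main__":
    print(exp_series(1.0), math.e)
```

The two printed values should agree up to floating-point rounding; the order in which terms are added depends on which worker finishes first.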

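Master-Worker pattern in web servers

A web server handles incoming request connections.

Unlike the task-submission pattern above, in which the master takes on part of the work by aggregating the results, in a web server the workers handle the requests themselves.

Web servers adopt this pattern to improve performance: after startup, the master pre-forks several worker processes, and each worker handles request connections independently.

The goal is to make full use of the computing resources of a multi-core CPU.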

nginx

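This server is written in C.

As described below, for each connection all workers compete for the right to handle it, and whichever worker wins handles that connection.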

https://zhuanlan.zhihu.com/p/96757160

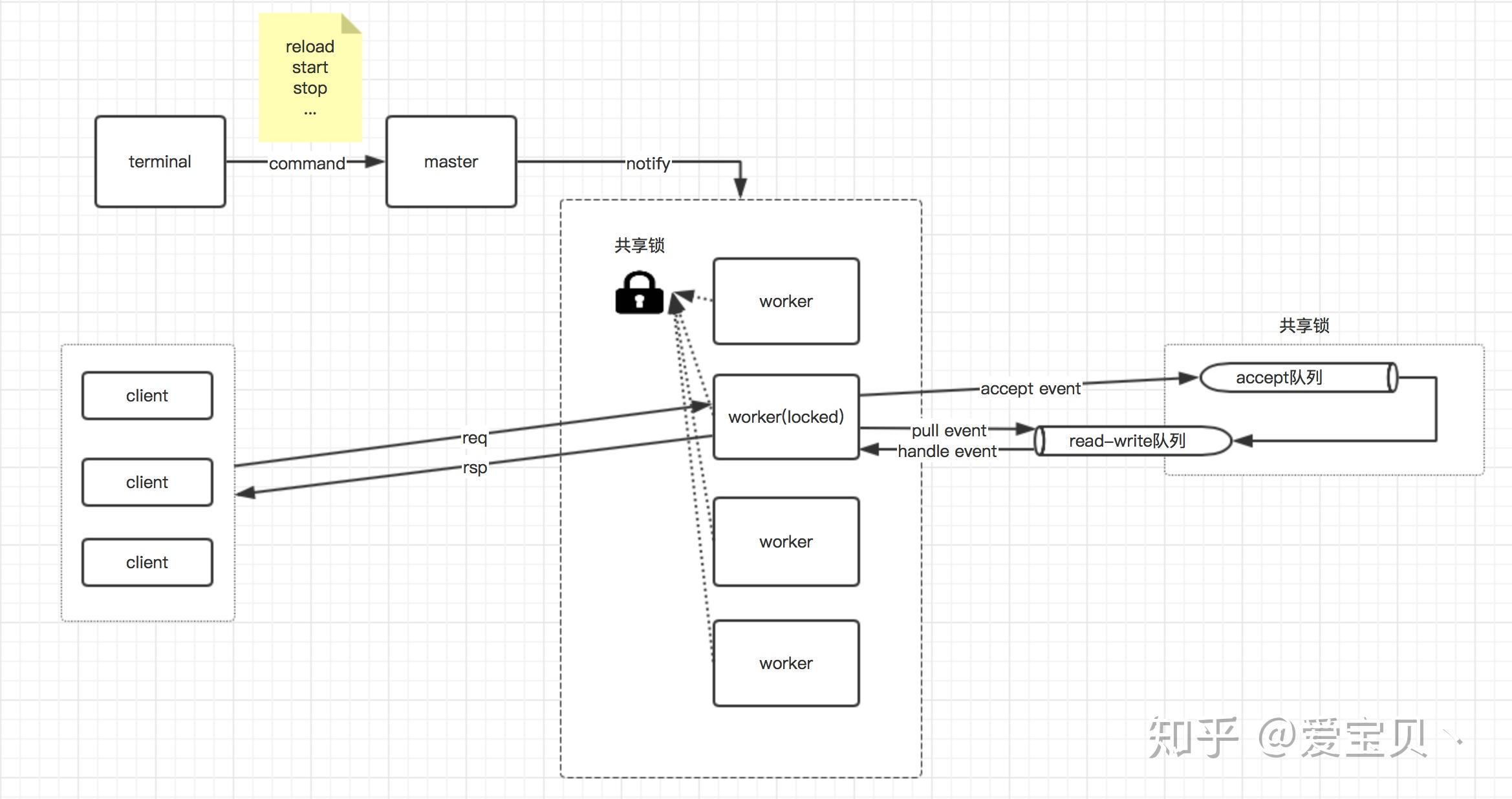

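During nginx startup, the main process becomes the master process. After starting the worker processes, the master enters an infinite loop in which it handles the control commands sent by the client, while each worker enters a loop in which it continuously accepts client connections and processes requests. The figure above is a schematic of the master-worker process model.

From the figure, the general working principle of nginx is:

- The master process receives control commands from the client, such as `-s reload` and `-s stop`. After parsing them, it sends the corresponding instruction to each worker process through inter-process communication, which is how it controls the workers;

- Every worker process competes for the same shared lock, and only the process holding the lock may accept new client connections;

- When client requests arrive, the worker processes the resulting events: an accept event is added to the accept queue, and a read or write event is added to the read-write queue;

- After the events have been queued, nginx handles all the connection requests in the accept queue while still holding the shared lock; read and write events are taken from the read-write queue and handled only after the lock has been released.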
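The steps above can be simulated in a few lines of Python. This is a hypothetical, single-pass sketch and not nginx's C implementation: a `threading.Lock` stands in for nginx's shared-memory lock, events are plain `(kind, connection_id)` tuples, and the function name `process_events` is invented. A worker that fails to get the lock leaves new connections to the other workers but still serves its read/write events.

```python
import threading


def process_events(lock, events, log):
    """One event-loop pass of a hypothetical nginx-like worker.

    events: list of (kind, conn_id) with kind in {"accept", "read", "write"}.
    Each handled event is appended to log as (kind, conn_id, lock.locked()).
    Returns True if this worker won the shared lock.
    """
    holds_lock = lock.acquire(blocking=False)   # compete for the lock, never block
    accept_queue, rw_queue = [], []
    for kind, conn in events:
        if kind == "accept":
            if holds_lock:                      # only the lock holder takes new connections
                accept_queue.append(conn)
        else:
            rw_queue.append((kind, conn))       # read/write on existing connections
    if holds_lock:
        try:
            for conn in accept_queue:           # the accept queue is drained under the lock
                log.append(("accept", conn, lock.locked()))
        finally:
            lock.release()                      # release before the read/write work
    for kind, conn in rw_queue:                 # read/write events are handled after release
        log.append((kind, conn, lock.locked()))
    return holds_lock
```

Releasing the lock before the read/write work keeps the critical section short, so other workers can start accepting sooner.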

Gunicorn

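This server is written in Python.

The master's job is to manage the worker processes, including scaling the number of workers up and down dynamically.

https://zhuanlan.zhihu.com/p/102716258

Gunicorn 'Green Unicorn' is a WSGI HTTP server for UNIX. It is a pre-fork worker model ported from Ruby's Unicorn project, and it supports both eventlet and greenlet.

For worker management it uses the pre-fork model: one master process manages several worker processes, and all requests and responses are handled by the workers. The master is a simple loop that listens for signals from the different processes and reacts to them. For example, on TTIN it increases the number of workers, and on TTOU it decreases the number of running workers. If a worker dies, a CHLD signal is raised and the master restarts the failed worker. A synchronous worker handles one request at a time.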
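A much-simplified Python sketch of such a master loop, assuming a POSIX system. `MiniArbiter` and its methods are hypothetical names loosely modeled on the description above, not Gunicorn's source: signal handlers only queue the signal, and each pass of the loop applies TTIN/TTOU to the desired worker count, reaps workers that have died (what CHLD reports), and forks or terminates workers until the count matches.

```python
import os
import signal
import time


class MiniArbiter:
    """Hypothetical pre-fork master; workers here just sleep instead of serving."""

    def __init__(self, num_workers):
        self.num_workers = num_workers    # desired number of workers
        self.workers = set()              # pids of live workers
        self.sig_queue = []               # signals waiting to be handled

    def install_signal_handlers(self):
        # Main thread only. Handlers just record the signal; run_once() acts on it.
        for sig in (signal.SIGTTIN, signal.SIGTTOU, signal.SIGCHLD):
            signal.signal(sig, lambda signum, frame: self.sig_queue.append(signum))

    def handle_signal(self, signum):
        if signum == signal.SIGTTIN:      # TTIN: one more worker
            self.num_workers += 1
        elif signum == signal.SIGTTOU:    # TTOU: one fewer worker, keep at least one
            self.num_workers = max(1, self.num_workers - 1)
        # SIGCHLD needs no bookkeeping here: reap_workers() runs on every pass.

    def spawn_worker(self):
        pid = os.fork()
        if pid == 0:                      # worker process
            try:
                signal.signal(signal.SIGTERM, signal.SIG_DFL)
                while True:               # a real worker would serve requests here
                    time.sleep(1)
            finally:
                os._exit(0)
        self.workers.add(pid)

    def reap_workers(self):
        for pid in list(self.workers):    # collect workers that have exited
            done, _status = os.waitpid(pid, os.WNOHANG)
            if done:
                self.workers.discard(pid)

    def manage_workers(self):
        while len(self.workers) < self.num_workers:   # also replaces dead workers
            self.spawn_worker()
        while len(self.workers) > self.num_workers:   # scale down
            pid = self.workers.pop()
            os.kill(pid, signal.SIGTERM)
            os.waitpid(pid, 0)

    def run_once(self):
        """One pass of the master loop."""
        while self.sig_queue:
            self.handle_signal(self.sig_queue.pop(0))
        self.reap_workers()
        self.manage_workers()
```

In real use, `install_signal_handlers()` would be called once from the main thread and `run_once()` in a loop; sending `kill -TTIN <master pid>` would then add a worker on the next pass.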

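uWSGI

This server is written in C.

In its master-worker mode, the master process creates the socket, binds it (bind), and listens on it (listen).

It then forks child processes, and each child calls accept to compete for incoming connections.

However, this suffers from the thundering herd problem: whenever a connection reaches the server, all workers are woken up and race to call accept, but only one of them can get the connection.

This wastes a great deal of CPU scheduling time.

The solution is to protect the accept code with an inter-process lock, so that only one worker process at a time can enter the critical section and call accept.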
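A Python sketch of the setup described above, assuming a POSIX system: the master creates, binds, and listens on the socket once, then forks workers that inherit it, and each worker wraps only the `accept()` call in a shared `multiprocessing` lock, so at most one worker is inside the critical section. The function names, the port-0 binding, and the fixed number of connections per worker (so the demo terminates) are assumptions for illustration; uWSGI itself turns on accept serialization with its `--thunder-lock` option.

```python
import multiprocessing as mp
import os
import socket


def worker_loop(listener, accept_lock, max_conns):
    """Worker: serialize accept() with the lock, handle the connection outside it."""
    for _ in range(max_conns):
        with accept_lock:                     # critical section: one accept() at a time
            conn, _addr = listener.accept()
        with conn:                            # request handling does not hold the lock
            conn.recv(1024)
            conn.sendall(f"served by {os.getpid()}\n".encode())


def start_prefork_server(n_workers=3, conns_per_worker=2):
    """Master: socket/bind/listen once, then fork workers that share the socket."""
    ctx = mp.get_context("fork")              # forked children inherit the listening socket
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind(("127.0.0.1", 0))           # port 0: the OS picks a free port
    listener.listen(128)
    accept_lock = ctx.Lock()                  # inter-process lock shared by all workers
    workers = [ctx.Process(target=worker_loop,
                           args=(listener, accept_lock, conns_per_worker))
               for _ in range(n_workers)]
    for w in workers:
        w.start()                             # the master itself never calls accept()
    return listener, workers


if __name__ == "__main__":
    listener, workers = start_prefork_server()
    port = listener.getsockname()[1]
    for _ in range(6):                        # 3 workers x 2 connections each
        with socket.create_connection(("127.0.0.1", port)) as c:
            c.sendall(b"ping")
            print(c.recv(1024).decode().strip())
    for w in workers:
        w.join()
    listener.close()
```

Keeping only `accept()` inside the lock means request handling in one worker never blocks the others from picking up new connections.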

https://uwsgi-docs.readthedocs.io/en/latest/articles/SerializingAccept.html

One of the historical problems in the UNIX world is the “thundering herd”.

What is it?

Take a process binding to a networking address (it could be AF_INET, AF_UNIX or whatever you want) and then forking itself:

```c
int s = socket(...)
bind(s, ...)
listen(s, ...)
fork()
```

After having forked itself a bunch of times, each process will generally start blocking on `accept()`:

```c
for(;;) {
    int client = accept(...);
    if (client < 0) continue;
    ...
}
```

The funny problem is that on older/classic UNIX, `accept()` is woken up in each process blocked on it whenever a connection is attempted on the socket. Only one of those processes will be able to truly accept the connection, the others will get a boring EAGAIN.

This results in a vast number of wasted cpu cycles (the kernel scheduler has to give control to all of the sleeping processes waiting on that socket).

This behaviour (for various reasons) is amplified when instead of processes you use threads (so, you have multiple threads blocked on `accept()`).

The de facto solution was placing a lock before the `accept()` call to serialize its usage:

```c
for(;;) {
    lock();
    int client = accept(...);
    unlock();
    if (client < 0) continue;
    ...
}
```

For threads, dealing with locks is generally easier but for processes you have to fight with system-specific solutions or fall back to the venerable SysV ipc subsystem (more on this later).

In modern times, the vast majority of UNIX systems have evolved, and now the kernel ensures (more or less) only one process/thread is woken up on a connection event.

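Process locks

https://zhuanlan.zhihu.com/p/85821779

Multiprocessing locks

- `lock = multiprocessing.Lock()` creates a lock

- `lock.acquire()` acquires the lock

- `lock.release()` releases the lock

- `with lock:` acquires and releases the lock automatically, similar to `with open() as f:`

Characteristics:

Whichever process grabs the lock first runs first; the other processes compete for the lock again only after that process has finished its critical section and released the lock.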
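A small example of the API listed above, assuming a POSIX system (`fork` start method): several processes increment a shared counter, and `with lock:` turns the non-atomic read-modify-write into a critical section, so the final count is exact. `add_many` and `locked_counter` are illustrative names.

```python
import multiprocessing as mp


def add_many(counter, lock, times):
    for _ in range(times):
        with lock:                    # lock.acquire() on entry, lock.release() on exit
            counter.value += 1        # read-modify-write: not atomic without the lock


def locked_counter(n_procs=4, times=1000):
    ctx = mp.get_context("fork")      # assumption: POSIX; "spawn" would need a __main__ guard
    lock = ctx.Lock()
    counter = ctx.Value("i", 0, lock=False)   # raw shared int without a built-in lock
    procs = [ctx.Process(target=add_many, args=(counter, lock, times))
             for _ in range(n_procs)]
    for p in procs:
        p.start()
    for p in procs:
        p.join()
    return counter.value


if __name__ == "__main__":
    print(locked_counter())           # 4 processes x 1000 increments = 4000
```

Without the `with lock:` line, increments from different processes can interleave and be lost, and the result may come out below 4000.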